Overview

MDN Ethical Values Statement

Monash DeepNeuron is a student-driven team that seeks to create user-friendly solutions using Artificial Intelligence (AI) and High-Performance Computing (HPC). These emerging technologies have grown rapidly in recent years, and Monash DeepNeuron acknowledges their capacity to inflict harm if there are no checks and balances in place to ensure that our projects are safe for the community. We hold ourselves to unwavering ethical standards to ensure the accountability of our members and accessibility for our users. For a student-run organisation that specialises in this advancing world of technology, ethics are essential both to creating a safe environment in which potential can thrive and to producing outcomes that benefit the greater good. Our professional ethics include:

Beneficence

To maintain a high standard of personal conduct, we ensure that our team makes a vow never to do harm and never to create something that will not ultimately benefit the community at large. We let our decisions be dictated by the needs of our users, not by personal advantage. This includes considerations of inclusivity, accessibility, impartiality and objectivity.

Respect

To show consideration towards others, whether they are fellow members of the team or members of the public whose interests we have at heart. We actively aim to foster trust, confidence and consideration among each other and with the public. This includes considerations of human agency, privacy and personal data collection, and fairness, to prevent prejudice or discriminatory bias in our projects.

Reliability

To act dependably and cultivate trust in our organisation by complying with high ethical standards and consistently documenting progress on projects. Because the technology is advancing rapidly, we ensure that there is accountability and oversight throughout the development stages, including security checks and compliance with regulations. This includes being responsible with confidential information and being transparent about our practices.

Empowerment

To educate the public about AI and promote it in a digestible and accessible manner, so as to improve people’s perception of emerging technologies. This includes seeking out and participating in discussions concerning AI, and keeping informed about its opportunities, risks, and benefits.

Scope and Application

Undergraduate research projects are the cornerstone of Monash DeepNeuron (MDN). They offer the opportunity for student members to develop both their technical skills, in contribution to an existing body of Artificial Intelligence (AI) research, and other relevant competencies which they will take with them into their future workplaces.

One of these ‘soft’ skillsets is the ability to consider the ethical implications of their work and take steps to ensure any risk of harm is minimised. The Ethical Framework for DeepNeuron Research Projects has been designed by the Law & Ethics Committee to provide guidance for technical teams on how to safeguard against ethical risks during the different stages of a project’s development.

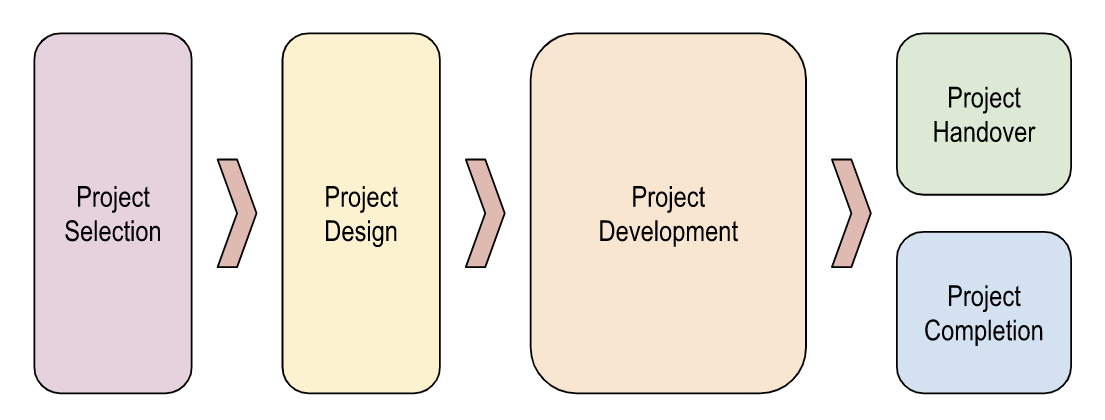

In doing so, the Committee has used the Australian Government’s Artificial Intelligence Ethics Framework to shape an approach to responsible AI development that is tailored to MDN’s specific needs and context. MDN’s Framework integrates these ethical principles into each stage of the project life cycle.

This framework creates responsibilities for Branch Leads and Project Managers in relation to risk management procedures. However, it is not solely for students in these roles. All team members benefit from understanding the ethical approach MDN takes to its research, and are therefore expected to do so.

General Ethical Guidelines

This section provides a foundation, both for students’ understanding and the framework itself, as to the importance of embedding an ethical approach in AI development.

Progress in the field of AI has the potential to improve many facets of the way our society operates. At the same time, not all impacts upon individuals and the broader community will be anticipated or positive. Any harm caused can vary just as much as the benefits brought (see: Figure 2). Incorporating ethical considerations, from beginning to end stages of a project, can lead to safer and fairer outcomes.

Taking steps to produce these outcomes, in turn, builds public trust in AI and consumer loyalty, and helps ensure that all Australians benefit from transformative technologies. For team members, this is a relevant consideration because it relates not only to the social impact of individual MDN projects, but also to the work they may apply their skills to in the course of future employment.

When it comes to pursuing MDN projects in a way that keeps ethical consideration at the forefront, this Framework emphasises the importance of asking questions. The consequences of failing to do so can be serious and detrimental, as seen in the Australian Government’s high-profile attempt at automating debt collection.

CASE STUDY: ‘ROBODEBT’

Algorithmic discrimination is a common problem in the field of AI. The so-called ‘Robodebt scandal’ is a classic and particularly devastating case of this.

Between 2015 and 2019, an online compliance intervention system was used by Services Australia to monitor and investigate Centrelink recipients’ wages automatically. It was developed by the Commonwealth Department of Social Services, in consultation with other Federal government departments, and its purpose was to ensure the accuracy of recipient income-reporting. This automated system, which became known as the Robodebt Scheme, could produce in one week the same number of discrepancy notices that were produced in a year when done manually.

Yet its use ultimately proved detrimental, giving rise to both a class action against the Federal Government and a Royal Commission investigation after one in five people received incorrect debt collection notices, requiring a total of 470,000 collected payments to be refunded. By overcalculating individuals’ employment earnings during the periods in which social security payments were received, the Robodebt system indicated that recipients had been overpaid in the relevant periods and thus owed a debt to the Commonwealth government.

The Robodebt Scheme is no longer in use. However, the harm it caused was significant and the effects have outlasted the automated system itself. Those eligible for government support commonly come from low socio-economic backgrounds and are already marginalised and disadvantaged. A 2019 Senate Committee Inquiry found that the scheme ‘indiscriminately targeted’ vulnerable demographics in the community, causing significant psychological and financial distress. Among debt notice recipients were 2,000 ‘vulnerable’ Australians who passed away following receipt of debt notices. Sufficient safeguards at every stage are all the more important when automation is intended to serve the interests of vulnerable populations.

As the Royal Commission progresses, accounts from those involved in both development and implementation are beginning to demonstrate a recurring theme: doubts went unraised, questions unasked, and risks ignored. What the Robodebt debacle highlights is that, regardless of seniority or the type of work undertaken by an individual within an organisation, upholding an ethical approach to a project is everyone’s responsibility.

In order to mitigate issues like algorithmic discrimination and allow AI models to flourish to the benefit of their users and the wider community, the Australian Government has identified eight key principles that should underpin the design, development and implementation of AI systems (see: Figure 3).

This is a form of guidance only. It does not create any legal obligations and it is not something businesses or any other organisations, like student research teams, are bound to follow. However, the principles it suggests are useful as a resource when discussing how best to safeguard against ethical risks to do with AI and as such form the ethical touchpoints considered throughout the MDN project life cycle under this Framework.

Project Selection

This section sets out the ethical consideration process for Branch Leads to follow when selecting projects to take on for the next project cycle.

Each Branch will have its own specific needs and existing approaches to what is required for a project to be selected. This part of the Framework is designed to supplement these things by building in consideration of ethical implications.

To maximise effectiveness, the ethical consideration element of the project selection process is applied once the following have been established for a given project:

- Project and aim – what is it that you are proposing to create?

- Defined scope – what area will you be working in? What is the end goal?

- Proposed tangible outcome – what will be the result of this project?

- Estimated project length – will it run for one project season, or multiple?

In order for a proposed project to be eligible for selection, it must be demonstrated that it will promote and preserve the following principles:

| Respect for human agency | Privacy, personal data protection and data governance | Fairness | Individual, social, and environmental well-being | Transparency | Accountability and oversight |
|---|---|---|---|---|---|
| Human beings must be respected to make their own decisions and carry out their own actions. Respect for human agency encapsulates three more specific principles, which define fundamental human rights: autonomy, dignity and freedom. | People have the right to privacy and data protection and these should be respected at all times. | People should be given equal rights and opportunities and should not be advantaged or disadvantaged undeservedly. | AI systems should contribute to, and not harm, individual, social and environmental wellbeing. | The purpose, inputs and operations of AI programs should be knowable and understandable to its stakeholders. | Humans should be able to understand, supervise and control the design and operation of AI based systems, and the actors involved in their development or operation should take responsibility for the way that these applications function and for the resulting consequences. |

This is demonstrated by a Statement of Compatibility that is prepared by the Branch Lead in consultation with a member of the Law & Ethics Committee. This is a document designed to ensure a proposed project has been reviewed on the basis of its ethical implications before it is selected to proceed to the design phase.

CASE STUDY: ‘CT Facial Reconstruction’

CT Facial Reconstruction was a past Deep Learning project at MDN. The overall aim of the project was to create, implement and train a set of neural network models that accurately generated reconstructed generalised 3D volume images from 3D CT head scans. This model was intended for use in the field of forensic facial reconstruction.

Examining the project overview, there are a number of ethical concerns that may arise. The following principles have been selected to highlight the key concerns.

Privacy, personal data protection and data governance: The data used to train the neural network models included up to 5000 scans from drug overdose victims. This raises concerns of privacy and data protection. It may be unclear whether these deceased individuals had provided consent for their images to be viewed and used for ‘proof of concept’ and training purposes.

Fairness: The model is being trained on a large data set taken from individuals of Caucasian descent. This raises the question of fairness and data bias, as the model may be skewed towards recognising and working with Caucasian features. This makes identifying individuals from non-Caucasian backgrounds a difficult task. It is noted that the MDN model has been created for conceptual use, rather than practical forensic application.

Reliability and safety: Alongside fairness, the issue of reliability can also arise. The project acknowledged the instability of adversarial learning, and the need for other training techniques that will improve model stability and reduce hyperparameter sensitivity. It is important that, in real-life use, these models have sufficient monitoring and testing to ensure reliability and accuracy.

Project Design

This section sets out an approach to ethical risk management which involves an initial assessment when a project is being designed and produces a tool that can be used throughout the remainder of the project.

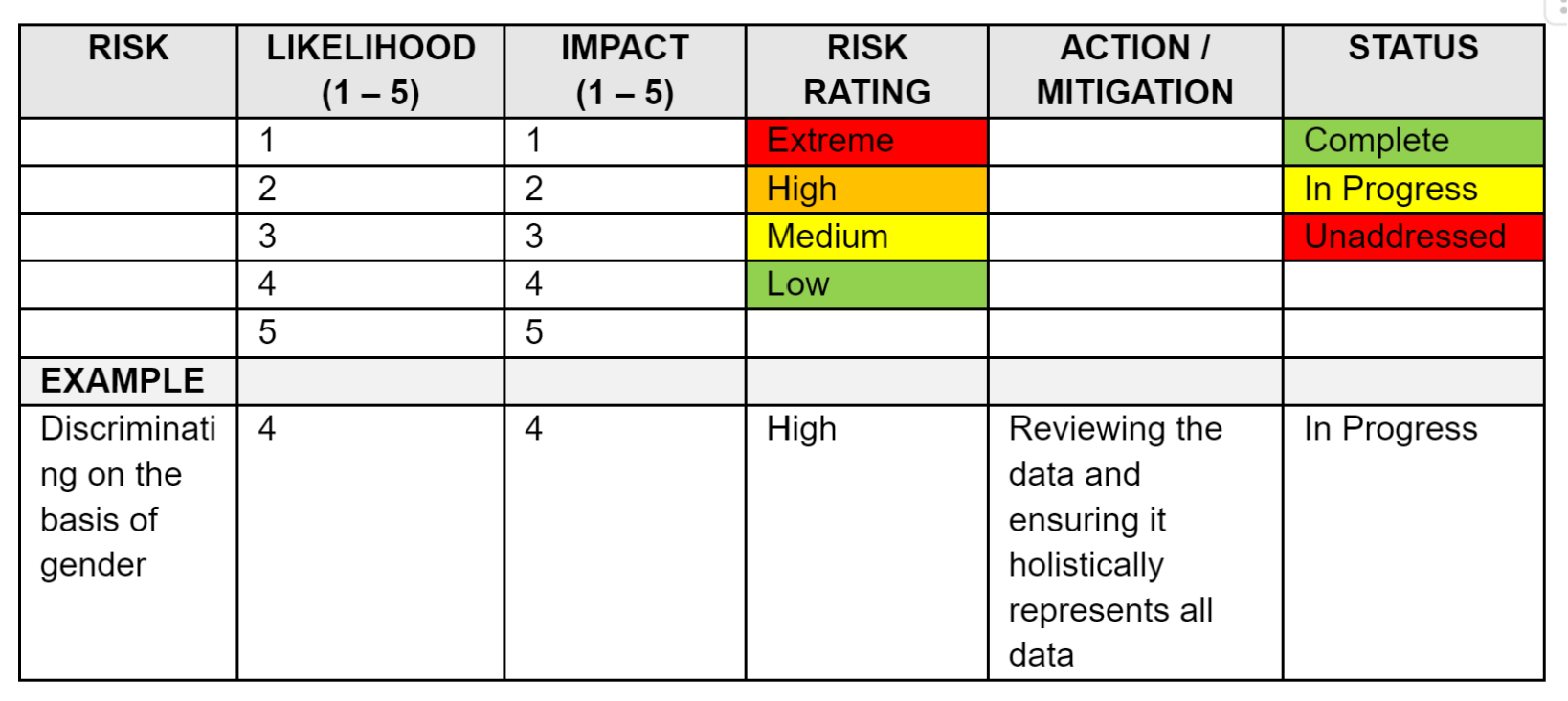

When designing a project, the Branch Lead completes a risk assessment tool in relation to ethical challenges that may be presented by the project at its outset or as its development progresses. This is completed and/or reviewed in consultation with the Law & Ethics Committee.

Identifying Risks

When considering how a project might pose an ethical risk, it is important to account for the fact that AI and computer systems are ‘socio-technical’ in nature, meaning that what might appear to be objective and technical components are nonetheless influenced by social factors during both their creation and their implementation.

Below are eight questions that can act as a guide in identifying and assessing ethical risks relating to a project.

| Principle and guiding question | Description |
|---|---|
| Human, Societal and Environmental Wellbeing: Is the platform/product actually providing a social good? | AI and computer systems should benefit individuals, society, and the environment. |
| Human-Centred Values: Does the system respect the human rights of individuals? | |
| Fairness: Is the AI inclusive and accessible, and not impacting on any individual or group discriminately? | |
| Privacy, Protection and Security: Does the system protect privacy and individual security? | |
| Reliability and Safety: Is the system operating as intended? | |
| Transparency and Explainability: Can you explain the decision that the system has arrived at? Will you inform people when and how their data is used? | |
| Contestability: Can someone contest a decision made by the system? | |
| Accountability: Who will take responsibility for the impacts that the system will have on people? | |

Mitigating Risks

The initial risk assessment involves devising strategies that will reduce the impact of the risks that have been identified. Some examples include:

1. Reviewing the quality of the data set:

Biased and incomplete data sets contribute heavily to discriminatory outcomes. It is important to be wary of systemic practices and of how social factors may affect the data (e.g. the overrepresentation of Indigenous people in crime statistics), and to ensure that the data adequately represents the relevant groups. Human oversight and judgement are essential.

2. Monitoring for data drift:

Poor model accuracy could be the result of target variables and independent variables changing over time. It is important to monitor and record these variables consistently, and to think about what impact any discrepancies between them may have.
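One way a team might monitor a variable for drift is sketched below. It is a minimal illustration, not a procedure prescribed by this Framework: it uses the Population Stability Index (PSI), a common drift statistic, to compare a variable's values recorded at training time with values recorded later. The function name `population_stability_index`, the choice of ten bins, and the sample data are all assumptions made for the example.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare two samples of one variable; larger values suggest more drift."""
    lo, hi = min(baseline), max(baseline)
    # Equal-width bins over the baseline range (hypothetical design choice).
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def bin_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the baseline range land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor each share at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    expected = bin_shares(baseline)
    actual = bin_shares(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


if __name__ == "__main__":
    # Illustrative data only: a variable at training time and the same
    # variable later, after its values have shifted upwards.
    training_values = [i / 100 for i in range(100)]
    later_values = [v + 0.5 for v in training_values]
    print(population_stability_index(training_values, training_values))
    print(population_stability_index(training_values, later_values))
```

A PSI of zero means the two samples fall into the bins in identical proportions. Rules of thumb such as treating values above roughly 0.25 as a significant shift are conventions from industry practice rather than part of this Framework, so any threshold a team adopts should be recorded in its risk assessment and reviewed with human judgement.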

Project Development

This section provides for the integration of previous stages of ethical consideration into the development of a project by the team members to whom it is allocated.

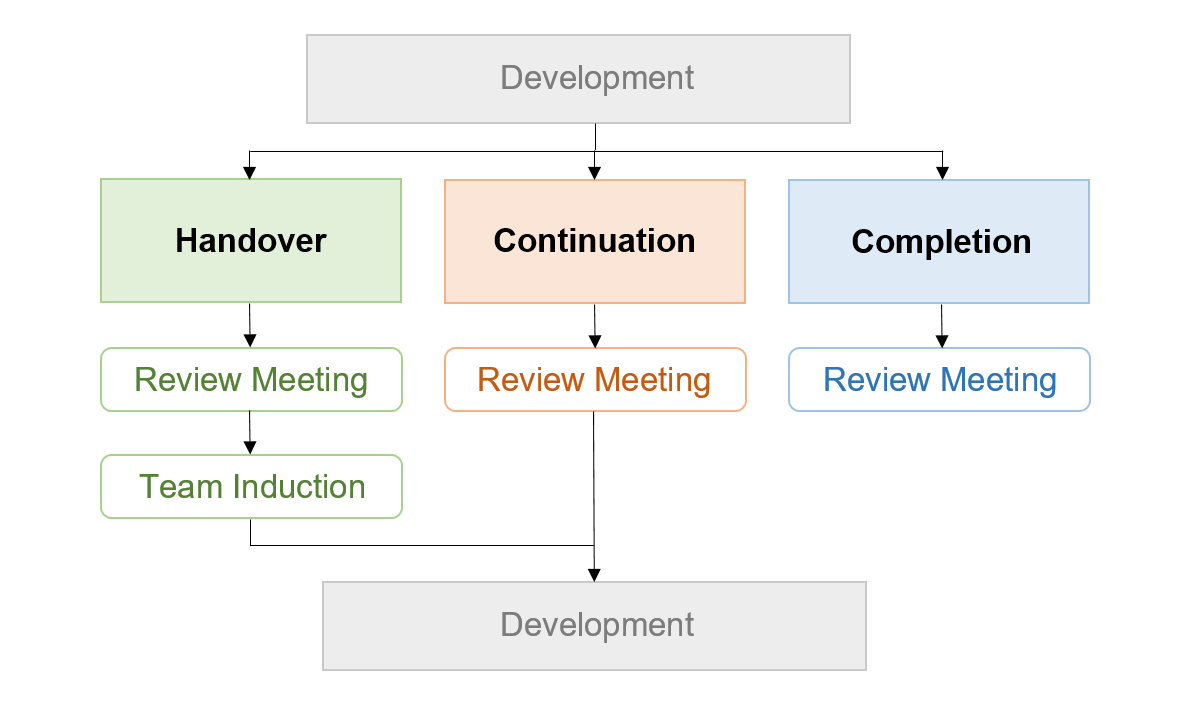

During the development phase of a project, Project Managers and other team members join the Branch Lead in the ethical consideration process. When team members are briefed on the project they have been allocated to, a team induction is held in which the Project Manager leads a group discussion on the ethical risks, with reference to the mitigation strategies outlined in the risk assessment tool.

Over the course of the development phase — typically a 12-week semester — each Project Manager will have one 30-minute check-in meeting with the Law & Ethics Committee to discuss how the project is progressing from an ethical perspective. This will take place approximately halfway through the project season, but the Committee is available for ongoing consultation when needed throughout the rest of the development period.

Project Handover, Continuation & Completion

This section sets out a process for handing over or closing out projects that ensures any incoming Branch Leads or Project Managers are equipped to engage with the L&E Framework, and ethical learnings are being built into the design process for subsequent development phases or for other future projects.

When a project season comes to an end, one of three things will happen: the project will be handed over to another MDN team to continue, it will continue development with its current team, or it will close for the immediate future. In each case, this is an important juncture at which to return to the goals, methods, and values established at its outset. Each Branch will have its own practices for what this looks like in terms of a given project. Here, the focus will be on continuity of ethical considerations and responsibilities.

Handover

Review Meeting

In the lead up to a new project season, the Branch Lead will organise a meeting with a project’s outgoing and incoming Managers (where applicable) and a member of the Law & Ethics Committee.

The purpose of this meeting is to:

- Review and sign off on the project’s Statement of Compatibility

- Discuss how best to address key ethical considerations in pursuit of any new project targets

- Ensure the incoming Project Managers are aware of their responsibilities during the next Development Phase of the project.

Statement of Compatibility

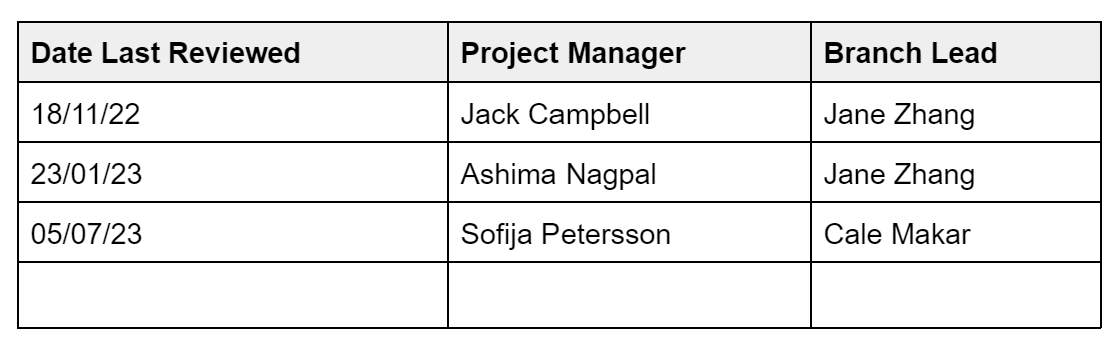

When a project is initially selected for pursuit by MDN, the Branch Lead will complete a ‘Statement of Compatibility’, explaining how the project’s aims will further the Ethical Values of the organisation. This is kept on file and is introduced to each new Manager as the project progresses.

The Statement must be reviewed together during the meeting and signed off on by both the Branch Lead and the incoming or continuing Manager. A Statement of Compatibility for a project that has been running for three seasons will look like this:

Team Induction

When new project team members are being introduced to an ongoing project, it is important that they are included in the discussion around ethical considerations. For new recruits joining their first full project, some grounding in this will be provided during induction and training.

Following the Review Meeting, the new or continuing Project Manager is responsible for leading a discussion with their team about the ethical implications of their work before any development work takes place. The presence of a Law & Ethics Committee member can be requested for this but is not mandatory. The Branch Lead is responsible for ensuring that all technical teams have made time to do this, and this will be the basis of a discussion with the Law & Ethics Committee at the conclusion of the first week of the project season Development Phase.

Continuation

Review Meeting

Where a project is still in development and will not be handed over to another branch for the next project season, a review meeting will still take place but without the need for onboarding an incoming Manager.

The purpose of this meeting is for the Branch Lead, the Project Manager, and a member of the Law & Ethics Committee to:

- Review and update the project’s Statement of Compatibility

- Evaluate how key ethical considerations were addressed over the project lifetime

- Discuss any new key ethical considerations that may arise in the future development of the project and how they will be addressed.

Completion

Review Meeting

Where a project is not continuing into the next project season, a review meeting will still take place but without the need for onboarding an incoming Manager.

The purpose of this meeting is for the Branch Lead, the Project Manager, and a member of the Law & Ethics Committee to:

- Review and update the project’s Statement of Compatibility

- Evaluate how key ethical considerations were addressed over the project lifetime.