Welcome

Welcome and congratulations on joining the HPC team. This is the HPC Training Book which contains the training material which will be used to train you in many of the preliminary HPC concepts required to participate in the HPC branch and in MDN as a whole. Throughout this book you will learn the basics of the C programming language, parallel and distributed computing and job batching. You will complete challenges across the weeks to test your knowledge and give you the opportunity to practice your skills.

What is HPC?

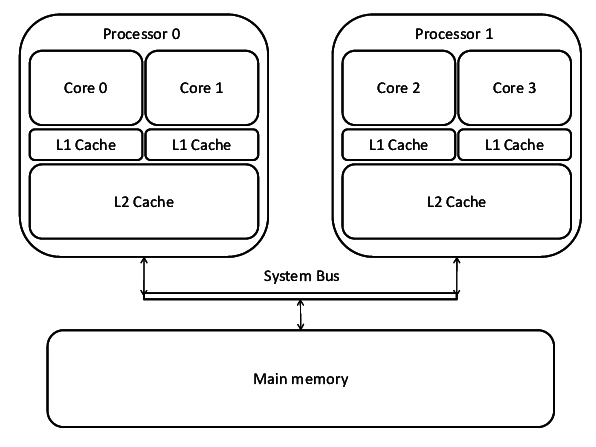

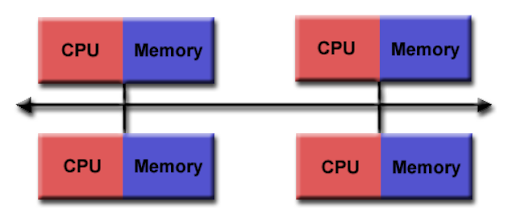

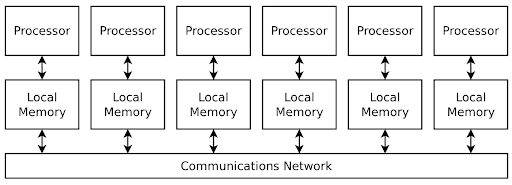

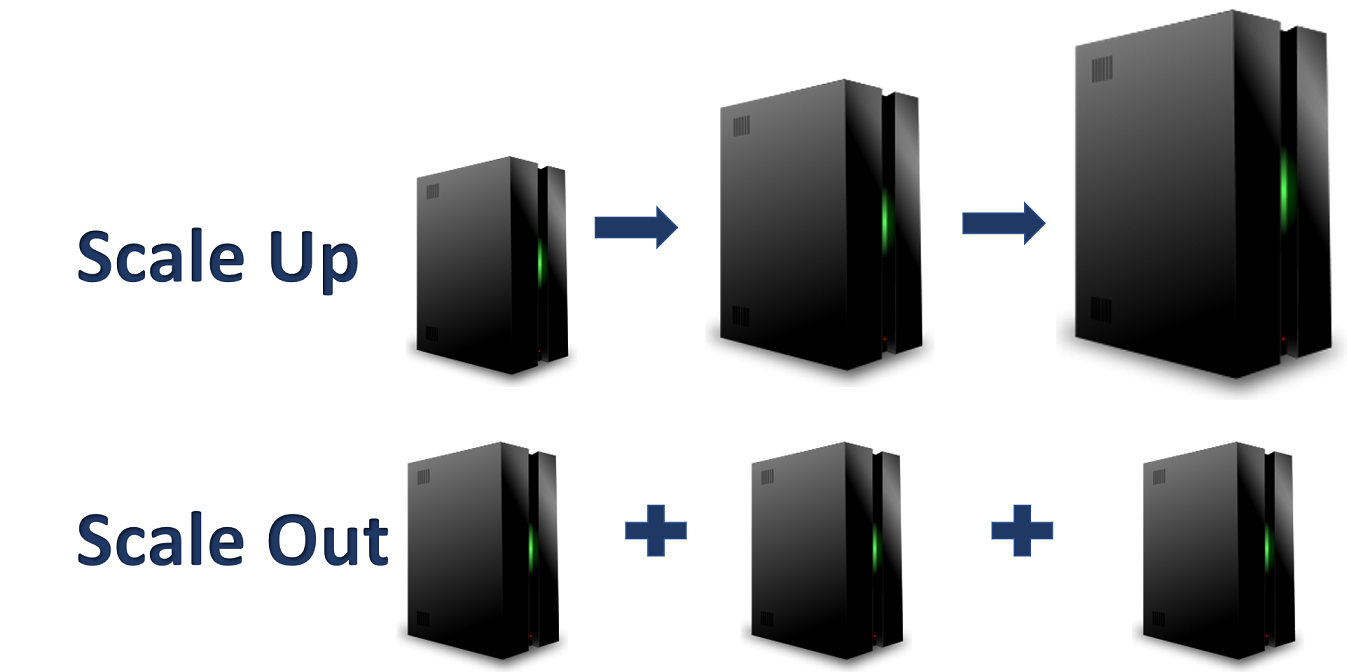

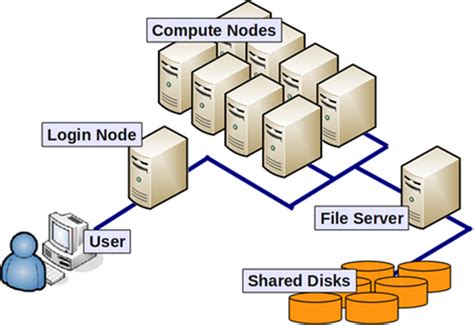

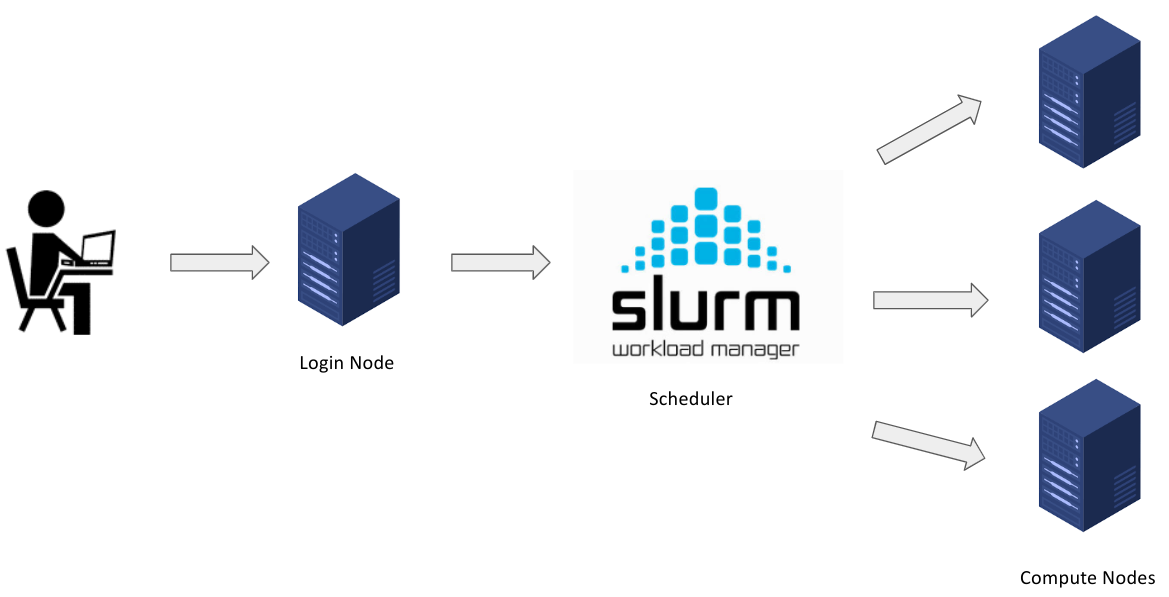

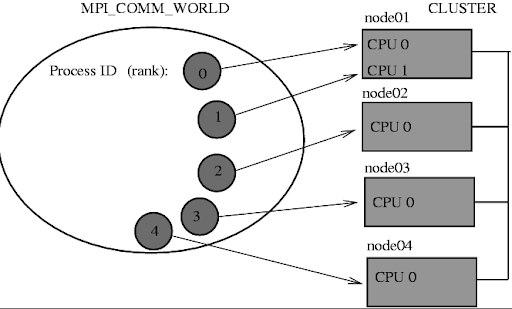

HPC stands for high performance computing. In involves using extremely powerful machines to perform complex tasks. It combines aspects of network management, parallel computing techniques and distributed computers to solve highly complex problems. The main focus of HPC is to build and use computer clusters; collections or grids of powerful computers that can communicate information between each other, to solve advanced computational problems that involve a lot of data or process power and time.

HPC at Monash DeepNeuron

The HPC branch at Monash DeepNeuron works on many different projects. Everything from creating mini clusters, to using supercomputer clusters to simulate complex, real world systems for research and technology development in may different scientific disciplines. Within the HPC branch you will learn a lot about HPC development starting with the foundational concepts and ideas involved in HPC to working on various projects that implement and utilise these techniques in order to solve many different complex problems.

HPC Training

What is all this and what is it for?

This is a book. More specifically it is the book containing all the content, training resources and learning materials that you will be using to complete you HPC training. The purpose of this book is to provide clear and concise learning resources that you can utilise effectively; not just in your HPC training, but for the entirety of your time at Monash DeepNeuron.

What are you going to learn?

During your HPC training, you are going to learn all about HPC concepts and various tools that exist that allow us to perform HPC development. You will start with the setup process which involves downloading some tools you will be using throughout your training as well as creating a GitHub account. You will then learn about the C programming language and to effectively use it to create powerful and fast computer programs. You will then gain access to M3; Monash's cluster environment, where you will learn hwo to distribute jobs across one or many different computers. You'll also learn about concepts involved in parallel and distributed computing and development programs using these techniques to improve the speed of software applications.

Along the way you will learn how to effectively use developer tools like Git and GitHub as well as solving various challenges each week to test and practice what you have learnt in each chapter.

How to use this book

Using the book is pretty self explanatory. The content is split up into chapters which covers a particular topic which can be further broken down sections. You navigate through the book mostly chronologically using the arrow buttons on either side of the page (can't miss them). You can also look through the chapters and sections to find particular topics or using the search bar, which can be activated by pressing S. Each chapter has a challenges section. These contain various tasks to complete related to the content of each chapter.

Contributing

You can contribute to the book by accessing its GitHub repository (GitHub log in the top right hand corner of any page). Follow the contributing guidelines on the repository for more details.

Getting Started

In this chapter you'll setup some essential software tools that you will be using throughout your training. Note that some sections are specific to particular platform and devices. Only complete the section that is specific to your platform. The GitHub section you must complete no matter which platform you are on.

GitHub

Git. What is it?

Git is a Source Control Management tool (SCM). It keeps a history of multiple files and directories in a bundle called a repository. Git tracks changes using save points called commits. Commits use .diff files to track the difference in files between commits. Repositories can have multiple branches allow many different developers to create new changes and fixes to a codebase that are separate from each other. You can also switch between branches to work on many different changes at once. These branches can then later be merged back together to a main branch, integrating the various changes.

What is GitHub?

GitHub is a remote Git service. This allows you to store Git repositories online so that individuals and teams can access and work on Git repositories and projects remotely. It offers many features on top of basic version control such as branch, issue and feature tracking, releases, CI/CD pipelines, project management and more. Its predominately used through its website which offers control of these features through a simple GUI. Throughout your time at Monash DeepNeuron, university and probably for the rest of your career (if in a software based role), you will use service like GitHub to help management the development of projects.

Your first task is to sign up for a GitHub account, if you haven't already. I would highly recommend using a personal email address (not a university one) as you will most likely want access to your account after university.

It is also a good idea to install the GitHub mobile app. This allows you track and manage projects and reply to messages and issues from your phone.

Joining Monash DeepNeuron's GitHub Organisation

Once you have signed up for GitHub, you will need to provide your instructors with your GitHub username. This is so we can invite you as a member of the Monash DeepNeuron's organisation on GitHub. This will give you access to projects and allow you to communicate with other members. This will also allow you to gain specific privileges for your future projects at Monash DeepNeuron. You're invitation to the organisation will be sent via the email used for your account.

Watching Repositories

GitHub allows you 'watch' repositories. This means you'll be notified of changes to the repository so that you can keep on top of is happening with various projects. You'll be using this later in your training.

Download GitHub Mobile

We would also request you install the GitHub mobile app. This can make it easier to login to your account (2FA), interact in discussions, reply to mentions and manage repositories and projects when you aren't at your computer.

Windows Setup

For your training you will need a few tools. Namely:

- Git

- C compiler toolchain (MSVC)

- A text editor (VSCode)

Installing Git

First, you will need to install Git. This allows you to use Git from the terminal and also gives you access to a bash shell environment. While following the install wizard, make sure to select the option that adds Git to your PATH. This important as it allows you to use Git in 'PowerShell'. Keep the other default operations. Git may require you to restart you machine.

Connect GitHub

Once Git has installed, open a new 'Git Bash' that was installed. This can be found in the Windows 'Start' menu. Once it is open, run the following commands, replacing the username and email section with your details (keeping the quotation marks).

git config --global user.name "<github-username>"

git config --global user.email "<github-email>"

Installing MSVC

Next we will need to install a C compiler toolchain. There a many different environments such as CygWin, MinGW but the most ideal environment is Microsoft's official development environment, MSVC. Download the Community Edition of Visual Studio and launch the installer. Under the 'Workloads' tab of the installer select the 'C++ Build Tools' bundle and click install. This may take a while. Once installed (may require restart) open the 'Start' menu and navigate to the 'Visual Studio' folder. There should a variety of different terminal environment applications. This is because Windows has slightly different toolchains and environments for x86 (32-bit) and x86_64 (64-bit). Select the 'Developer Command Prompt for VS 2022' app. In the terminal that spawns, run the command.

cl

This will display the help options for cl, Window's C compiler.

VSCode Installation and Setup

Now that MingW and GCC are installed and setup we will want to setup a text editor so we can easily edit and build our programs. For your training, I recommend using VSCode as it allows you to customize you developer environment to your needs. If you prefer another editor such as Neovim, feel free to use them as you please.

First download VSCode for Windows VSCode Download

Once installed, open the app and navigate to the extensions tab (icon on the left size made of four boxes). Using the search bar, install the following extensions.

- C/C++

- GitLens

- Git Graph

- GitHub Pull Requests and Issues

- Sonarlint

And thats it, you are all setup.

Mac Setup

For your training you will need a few tools. Namely:

- Xcode

- Git

- C compiler toolchain (GCC)

- A text editor (VSCode)

Installing Xcode, Git & GCC

First, we will need Xcode command line tool utilities, to do so, open the 'Terminal' app and run the following command:

xcode-select --install

This will prompt you to accept the install and will download Git and GCC onto your device. To verify installation was successful, run:

$ xcode-select -p

# Should print this

/Library/Developer/CommandLineTools

Note:

Here,

$indicates the prompt of the terminal. Do not include it in the command. This may be a different symbol on your device.

VSCode Installation and Setup

Now that Homebrew, Xcode and GCC are installed and setup we will want to setup a text editor so we can easily edit and build our programs. For your training, I recommend using VSCode as it allows you to customize you developer environment to your needs. If you prefer another editor such as Neovim, feel free to use them as you please.

First download VSCode for Mac VSCode Download

Once installed, open the app and navigate to the extensions tab (icon on the left size made of four boxes). Using the search bar, install the following extensions.

- C/C++

- GitLens

- Git Graph

- GitHub Pull Requests and Issues

- Sonarlint

And thats it, you are all setup.

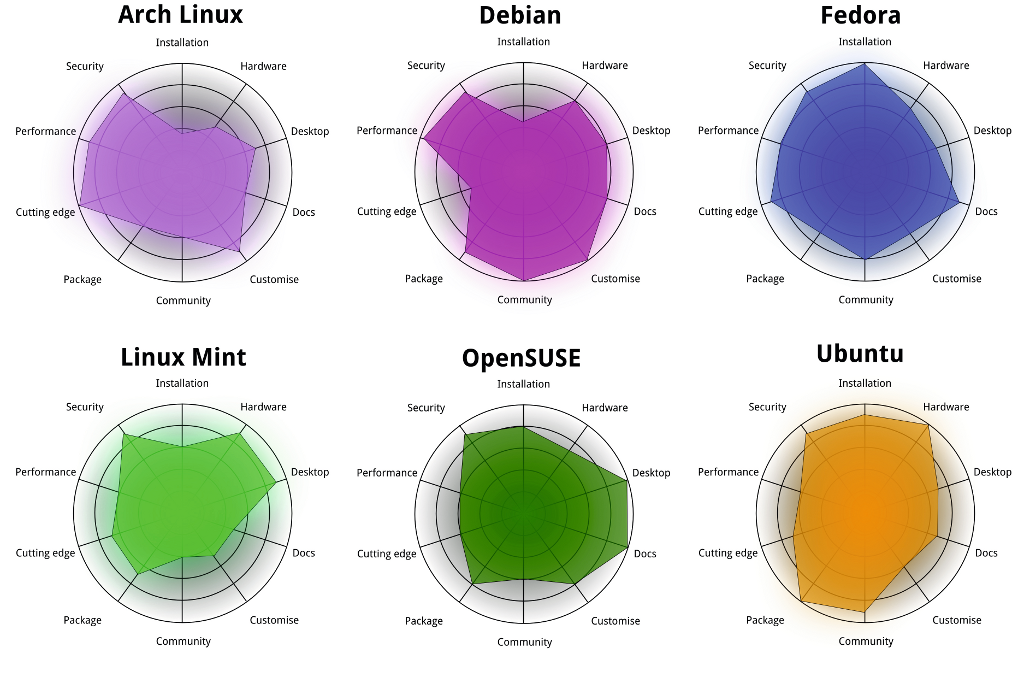

Linux Setup

For your training you will need a few tools. Namely:

- Git

- C compiler toolchain (GCC)

- A text editor (VSCode)

For this section I will be assuming a Debian system, namely Ubuntu. Replace apt commands with your distributions relevant package manager.

Installing Packages

To begin, you will need to install some packages. This will be done using apt, Ubuntu's system package manager. Run the commands in the shell.

# `sudo` represents 'super user do'.

# This runs a command with admin. privileges.

# Update apt's local package index.

sudo apt update

# The `-y` flag means upgrade yes to all.

# This bypasses confirming package upgrades.

# Upgrade packages with new versions

sudo apt upgrade -y

# Installs specified packages (separated by spaces).

sudo apt install git curl wget build-essential

And that's it. Linux is setup and installed.

Connect Git & GitHub

Next we will link your GitHub account to you local Git install. Run the following commands, replacing the username and email section with your details (keeping the quotation marks).

git config --global user.name "<github-username>"

git config --global user.email "<github-email>"

VSCode Installation and Setup

Now that GCC is installed and setup we will want to setup a text editor so we can easily edit and build our programs. For your training, I recommend using VSCode as it allows you to customize you developer environment to your needs. If you prefer another editor such as Vim, Emacs or Neovim, feel free to use them as you please.

First download VSCode for Linux VSCode Download

Once installed, open the app and navigate to the extensions tab (icon on the left size made of four boxes). Using the search bar, install the following extensions.

- C/C++

- GitLens

- Git Graph

- GitHub Pull Requests and Issues

- Sonarlint

And thats it, you are all setup.

WSL Setup

For your training you will need a few tools. Namely:

- Windows Terminal

- Windows Subsystem for Linux

- Installing Ubuntu

- Git

- C compiler toolchain (GCC)

- A text editor (VSCode)

This section will cover how to install WSL on Windows. If you already have a WSL setup you can skip these steps.

Installing Windows Terminal

The first thing you will need is the new Windows Terminal app. This makes it easier to have multiple shells open, even different shells. This will be used to access your WSL instance.

Note:

Shells are an environments that allow access to the operating system (OS), hardware and other system processes through simple shell commands, usually a shell language like Bash or Powershell.

Installing and Setting Up WSL

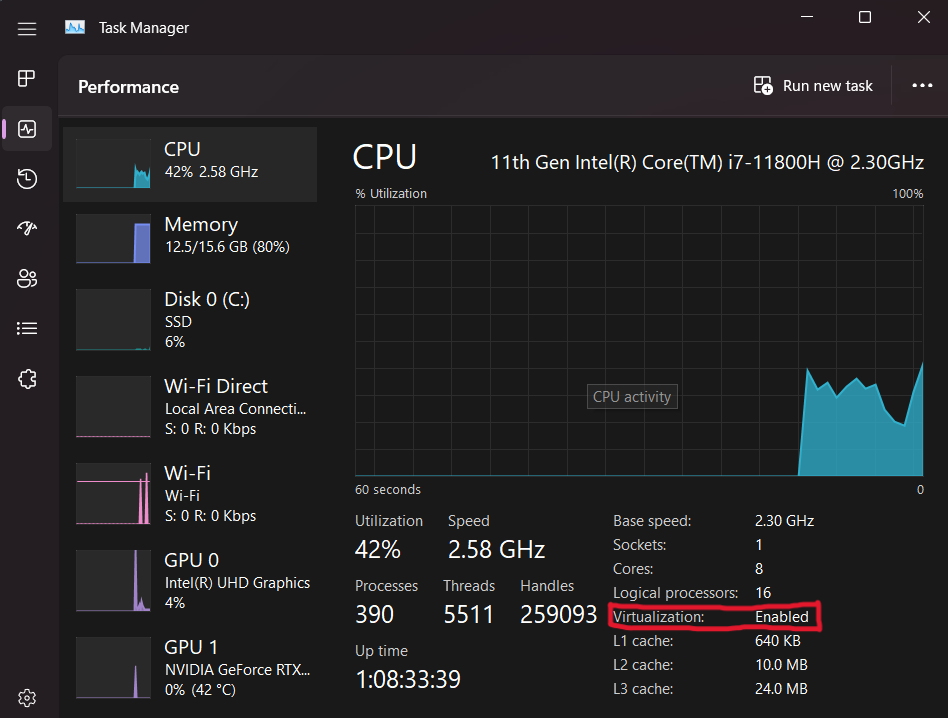

Before you begin make sure to update Windows to make sure you have the latest version. You will then need to check in order to see if you can install WSL is if virtualization is enabled for your device. You can check this by opening 'Task Manager', clicking on the performance tab and looking at the CPU details. There will be an option called 'Virtualization' which should say 'Enabled'.

Next, open Powershell with Administrative Privileges and run the following command.

wsl --install -d Ubuntu-22.04

Once this has finished, you may need to restart your machine. With that WSL is installed. You should be able to open a Ubuntu shell using Windows Terminal by clicking the arrow icon next to the default tab and selecting Ubuntu. On your first launch, this may require you to setup your user credentials.

Installing Packages

To begin, you will need to install some packages. This will be done using apt, Ubuntu's system package manager. Run the commands in the shell.

# `sudo` represents 'super user do'.

# This runs a command with admin. privileges.

# Update apt's local package index.

sudo apt update

# The `-y` flag means upgrade yes to all.

# This bypasses confirming package upgrades.

# Upgrade packages with new versions

sudo apt upgrade -y

# Installs specified packages (separated by spaces).

sudo apt install git curl wget ca-certificates build-essential

And that's it. WSL is setup and installed.

Connect Git & GitHub

Next we will link your GitHub account to you local Git install. Run the following commands, replacing the username and email section with your details (keeping the quotation marks).

git config --global user.name "<github-username>"

git config --global user.email "<github-email>"

VSCode Installation and Setup

Now that WSL, Ubuntu and Git are installed and setup we will want to setup a text editor so we can easily edit and build our programs. For usage with WSL ans your training in general I recommend using VSCode as it allows you to customize you developer environment to your needs. It also can access the WSL filesystem allowing you to work on projects in a native Linux environment on Windows. If you prefer another editor such as Vim, Emacs or Neovim, feel free to use them as you please.

First download VSCode for Windows VSCode Download

Once installed, open the app (on the Windows side) and navigate to the extensions tab (icon on the left size made of four boxes). Using the search bar, install the following extensions.

- C/C++

- GitLens

- Git Graph

- GitHub Pull Requests and Issues

- Sonarlint

- Remote development

- WSL

- Remote SSH

You can then navigate to the window with the Ubuntu shell from before and run:

code .

This will installed VSCode on the WSL from your Windows version and open it in the home directory. And thats it, you are all setup.

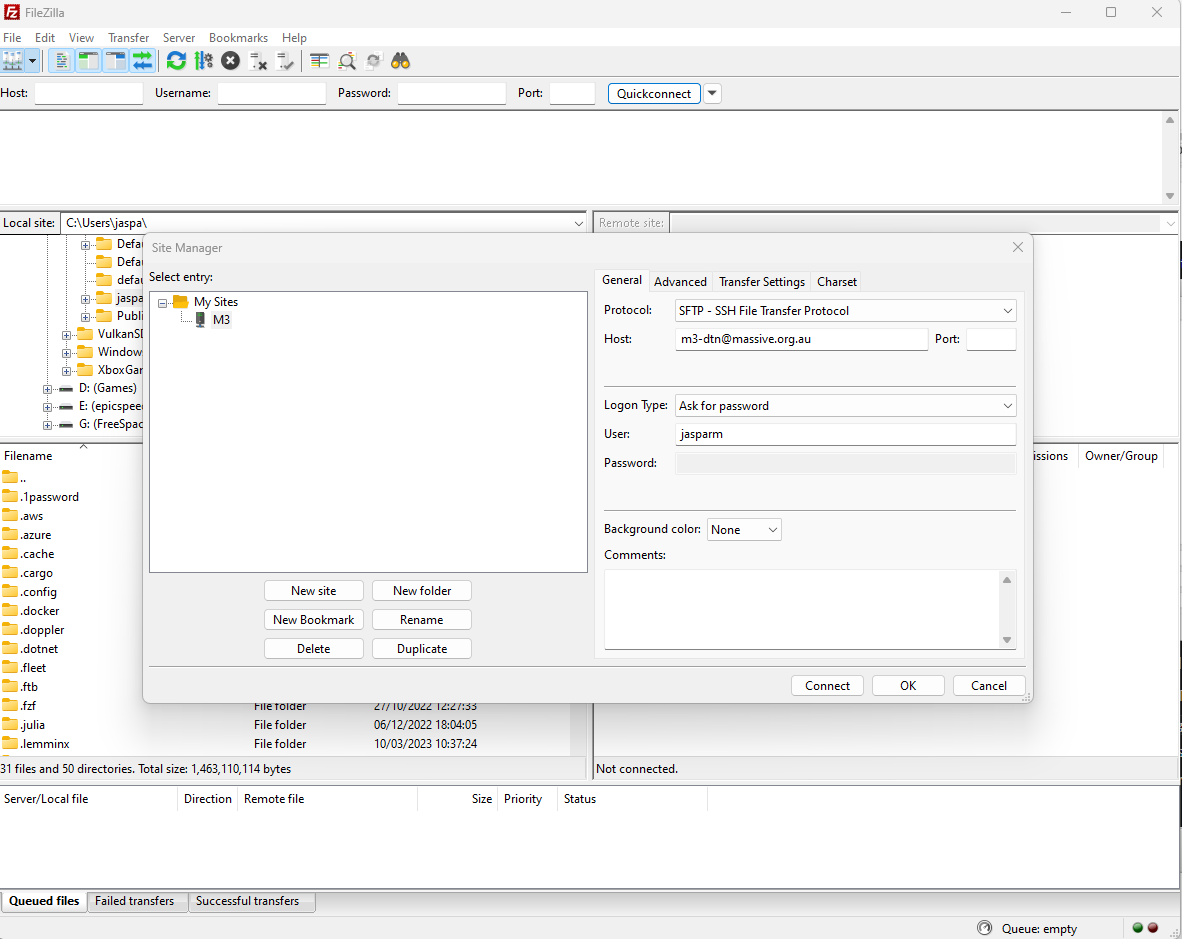

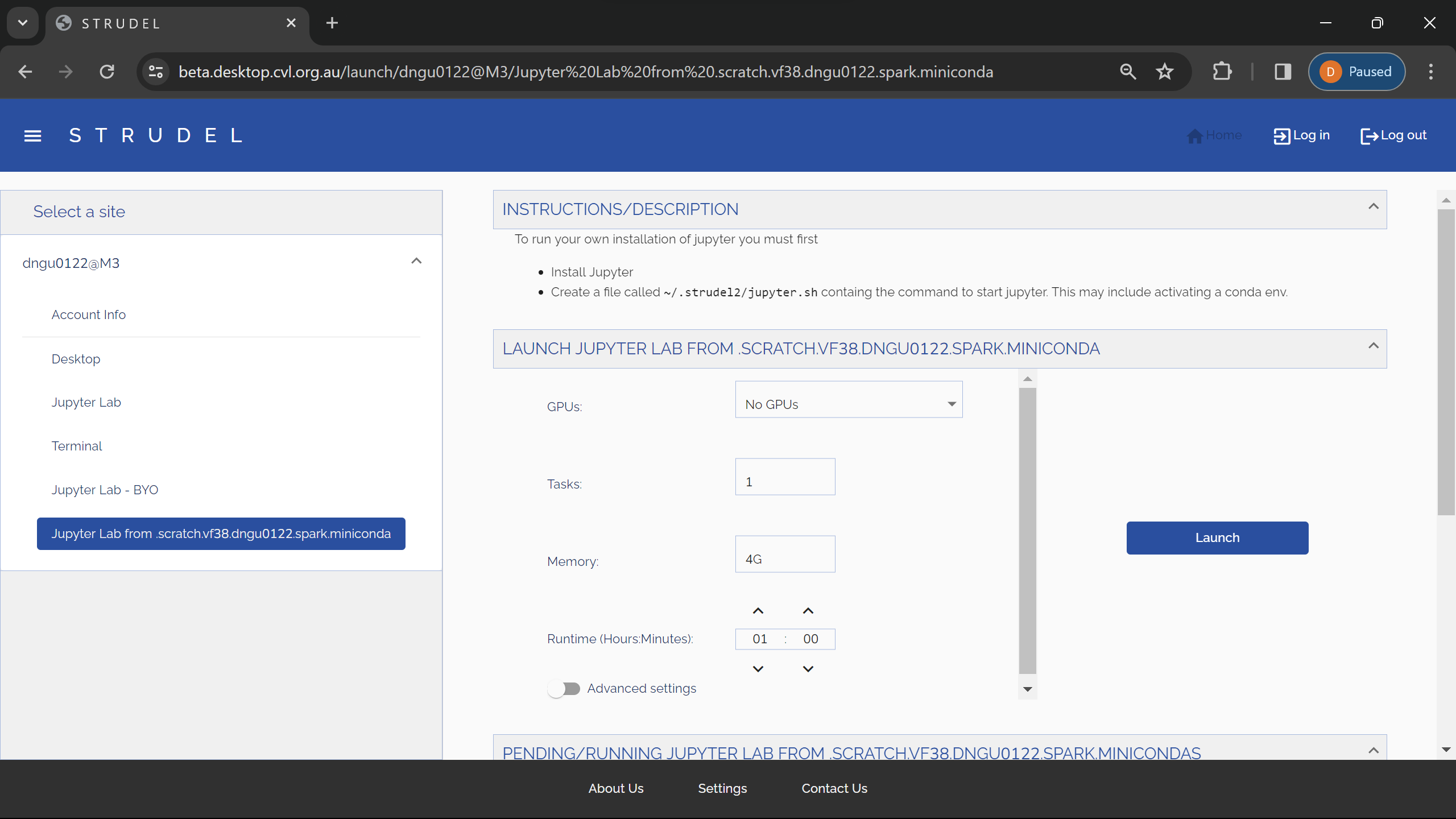

M3 MASSIVE

MASSIVE (Multi-modal Australian ScienceS Imaging and Visualisation Environment) is a HPC supercomputing cluster that you will have access to as an MDN member. In this page we will set you up with access before you learn how to use it in Chapter 5. Feel free to go through the docs to learn about the hardware config of M3 (3rd version of MASSIVE) and it's institutional governance.

Request an account

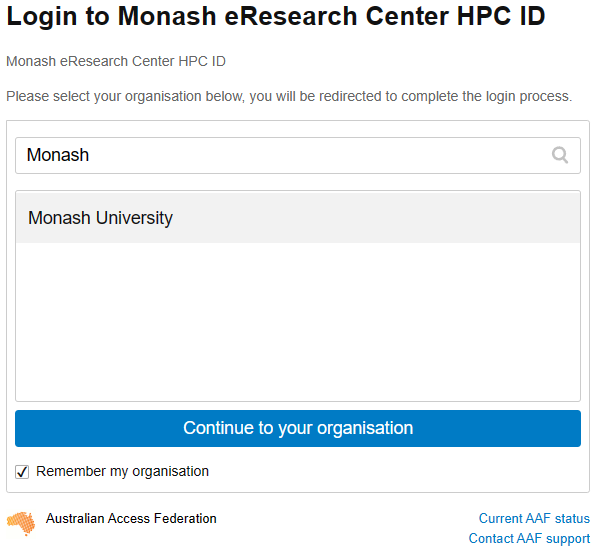

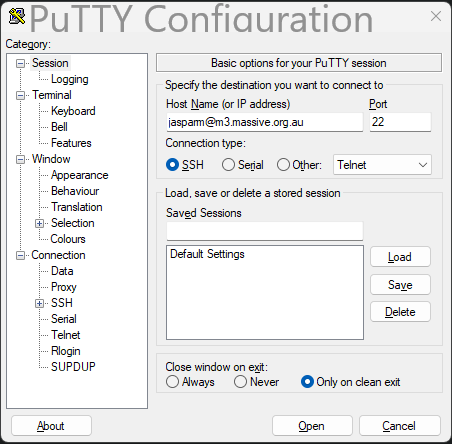

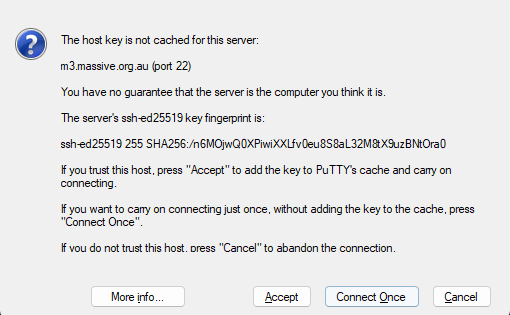

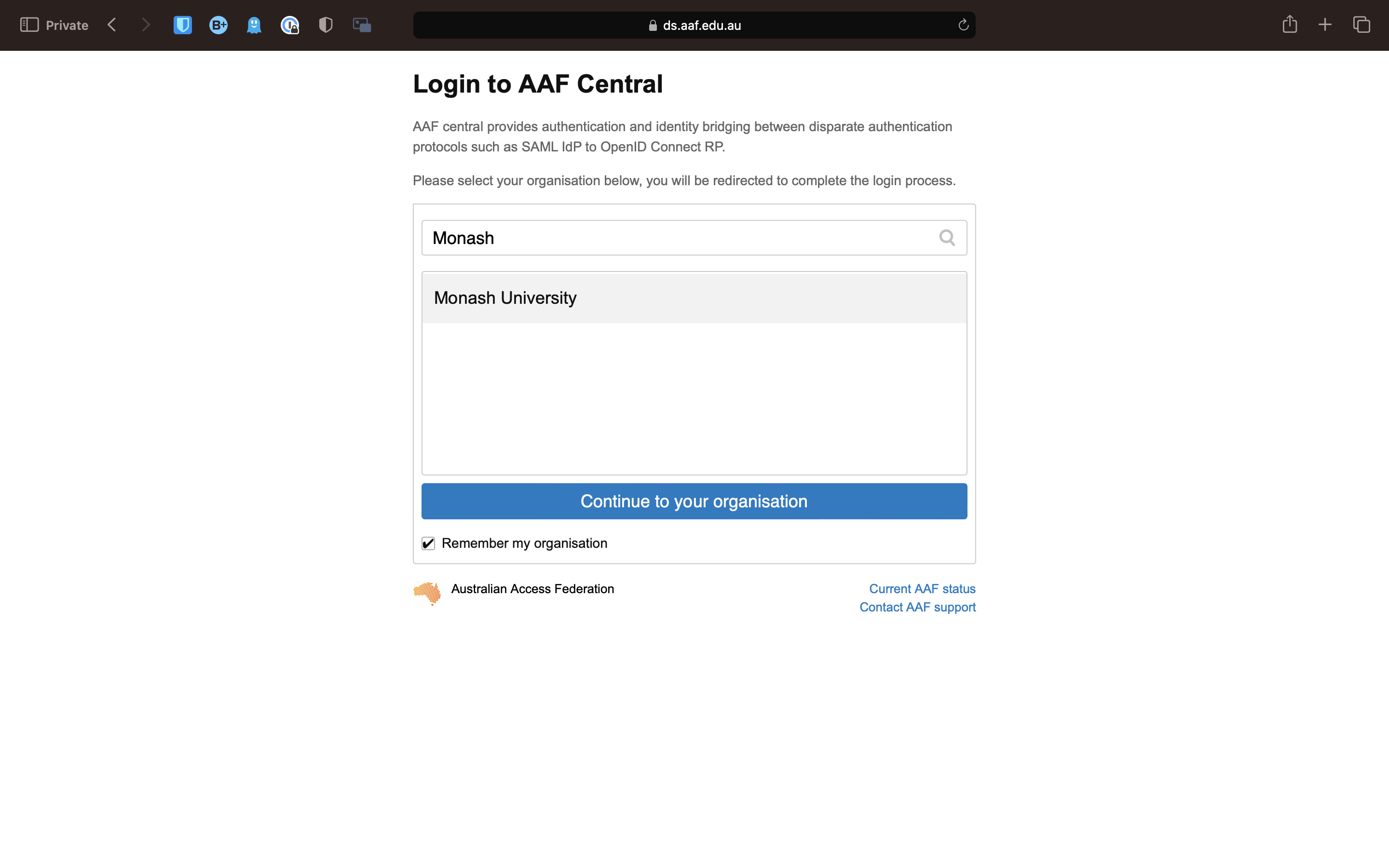

In order to access M3, you will need to request an account. To do this, follow this link: HPC ID. This should take you to a page this this:

Type in Monash, as you can see here. Select Monash University, and tick the Remember my organisation box down the bottom. Once you continue to your organisation, it will take you to the Monash Uni SSO login page. You will need to login with your Monash credentials.

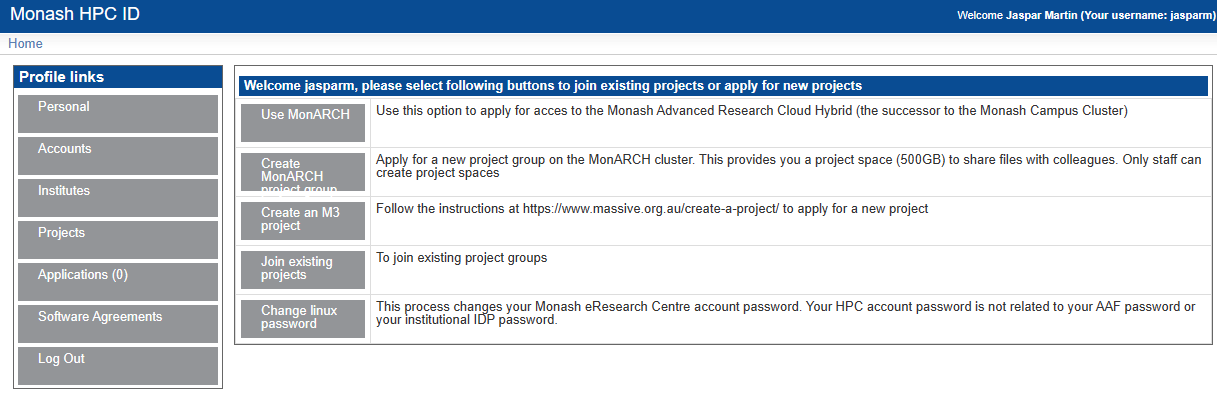

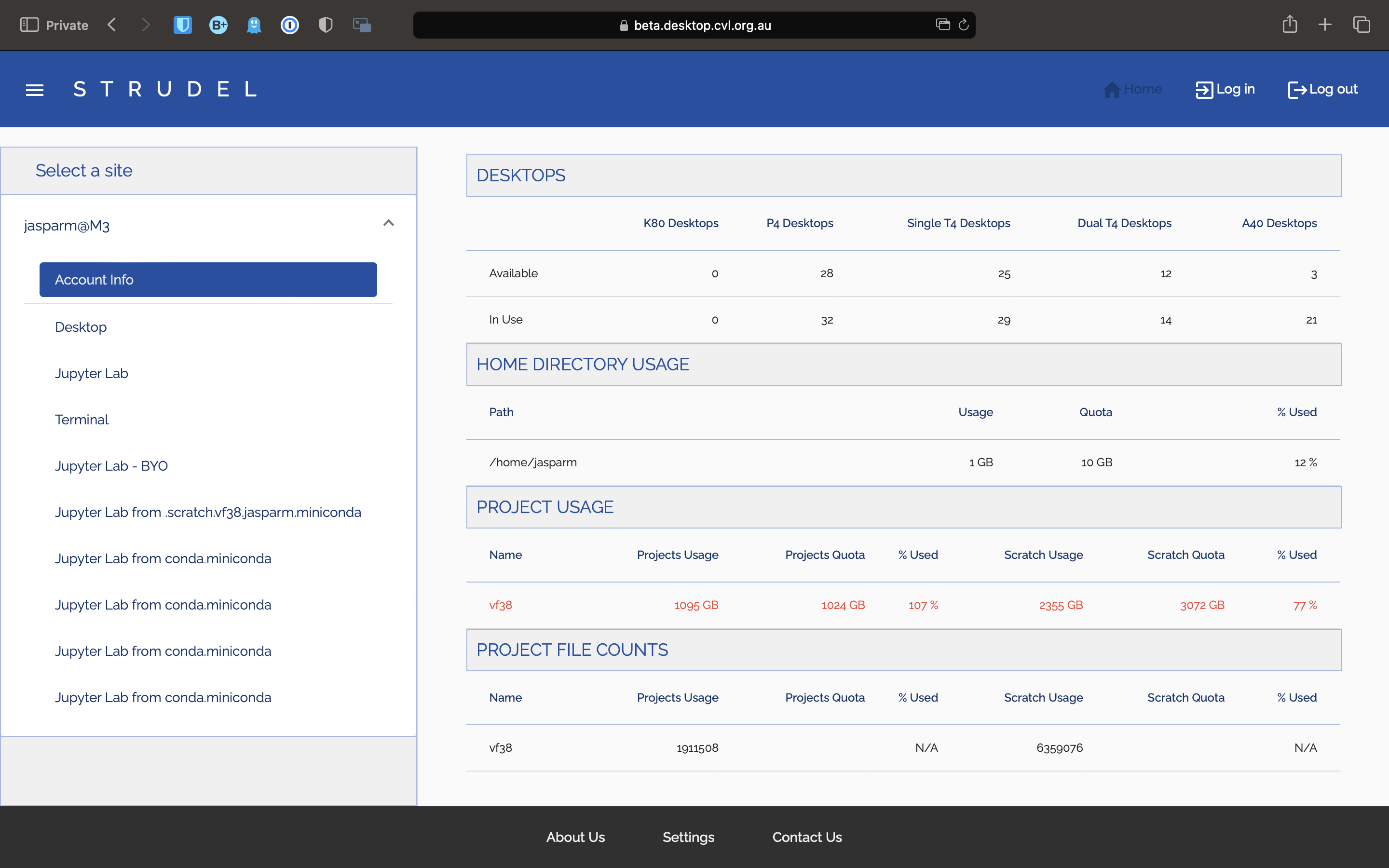

You should now see something like this:

Once you are here, there are a couple things you will need to do. The first, and most important is to set your HPC password. This is the password you will use to login to M3. To do this, go to home, then click on Change Linux Password. This will take you through the steps of setting your password.

Once you have done this, you can move on to requesting access to the MDN project and getting access to gurobi.

Add to project

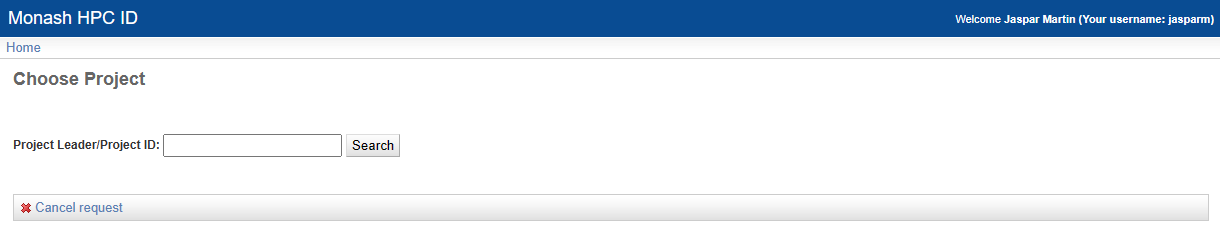

To request to join the MDN project, again from the Home page click on Join Exiting Project. You should see a screen like this:

In the text box type vf38 and click search. This is the project code for MDN. Then select the project and click submit. You will now have to wait for the project admins to approve your request. Once they have done this, you will be able to access the project. This should not take longer than a few days, and you will get an email telling you when you have access.

Once you have access to everything, you are ready to get started with M3. Good job!!

Git SSH setup

In order to reliably clone git repos in M3, in particular private ones, it is best practice to use SSH cloning. This is a bit more complicated to set up, but once it is done, it is much more streamlined. There are few steps involved. First, you will need to generate an SSH key on M3. Login to M3, and run the following command:

ssh-keygen -t ed25519 -C "your_email@example.com"

This will then prompt you to enter a file location. Just press enter to use the default location. It will then ask you to enter a passphrase. This is optional, but recommended.

Once you have generated your key, you need to add it to the ssh agent. Do this by running:

eval "$(ssh-agent -s)"

ssh-add ~/.ssh/id_ed25519

You will then need to copy the public key to your clipboard. You can do this by running:

cat ~/.ssh/id_ed25519.pub

Then, go to your github account, go to settings, and click on the SSH and GPG keys tab. Click on New SSH key, and paste the key into the box. Give it a name, and click Add SSH key.

You should now be able to clone repos using SSH. To do this, go to the repo you want to clone, but instead of copying the HTTP link, copy the SSH link, and then its regular git cloning.

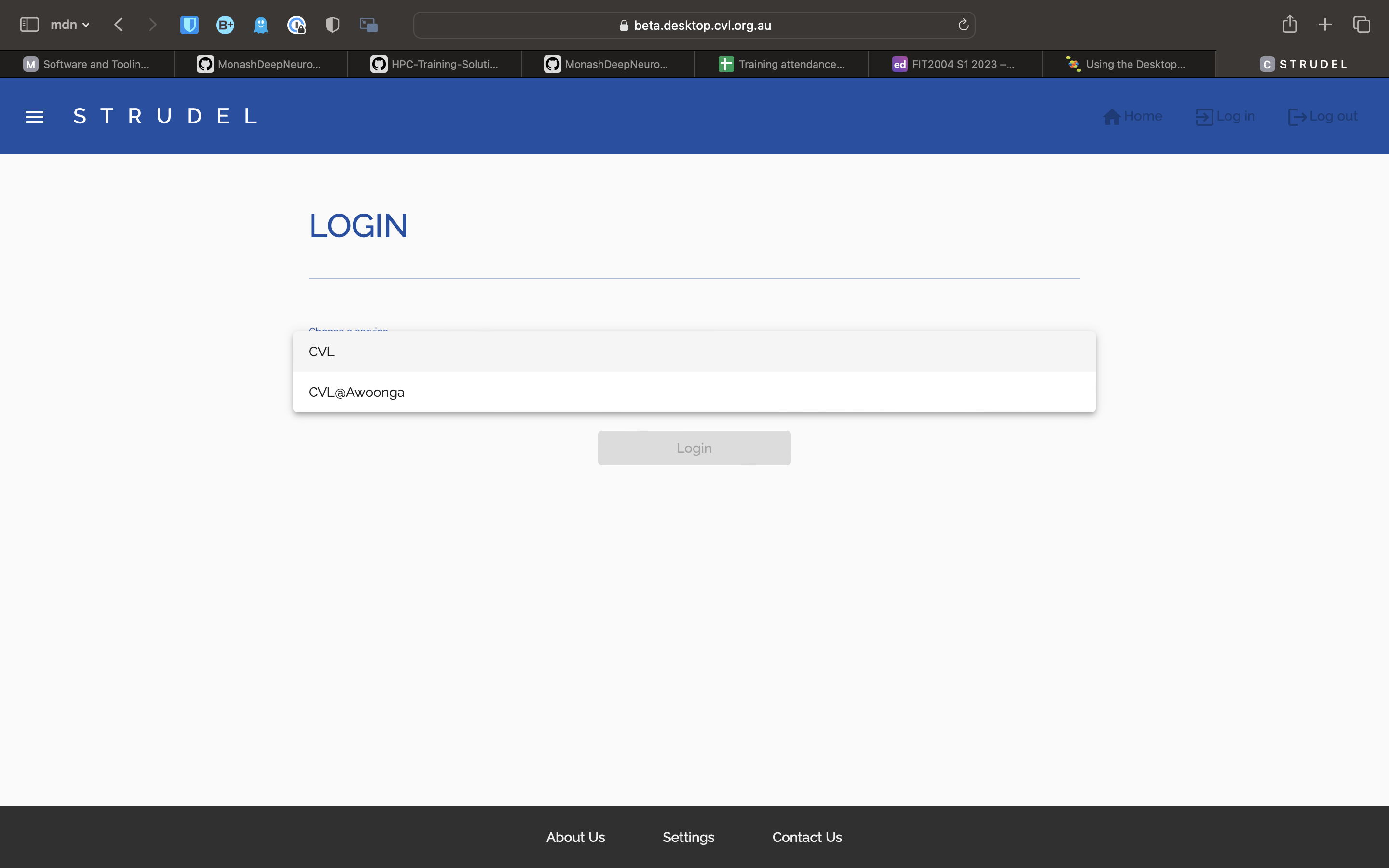

Nectar Cloud

The ARDC Nectar Research Cloud (Nectar) is Australia’s national research cloud, specifically designed for research computing. Like with M3, we will set you up with access now before you learn about it in later chapters. This webpage explains what it is if you're curious.

Connect Monash Account to Nectar Cloud

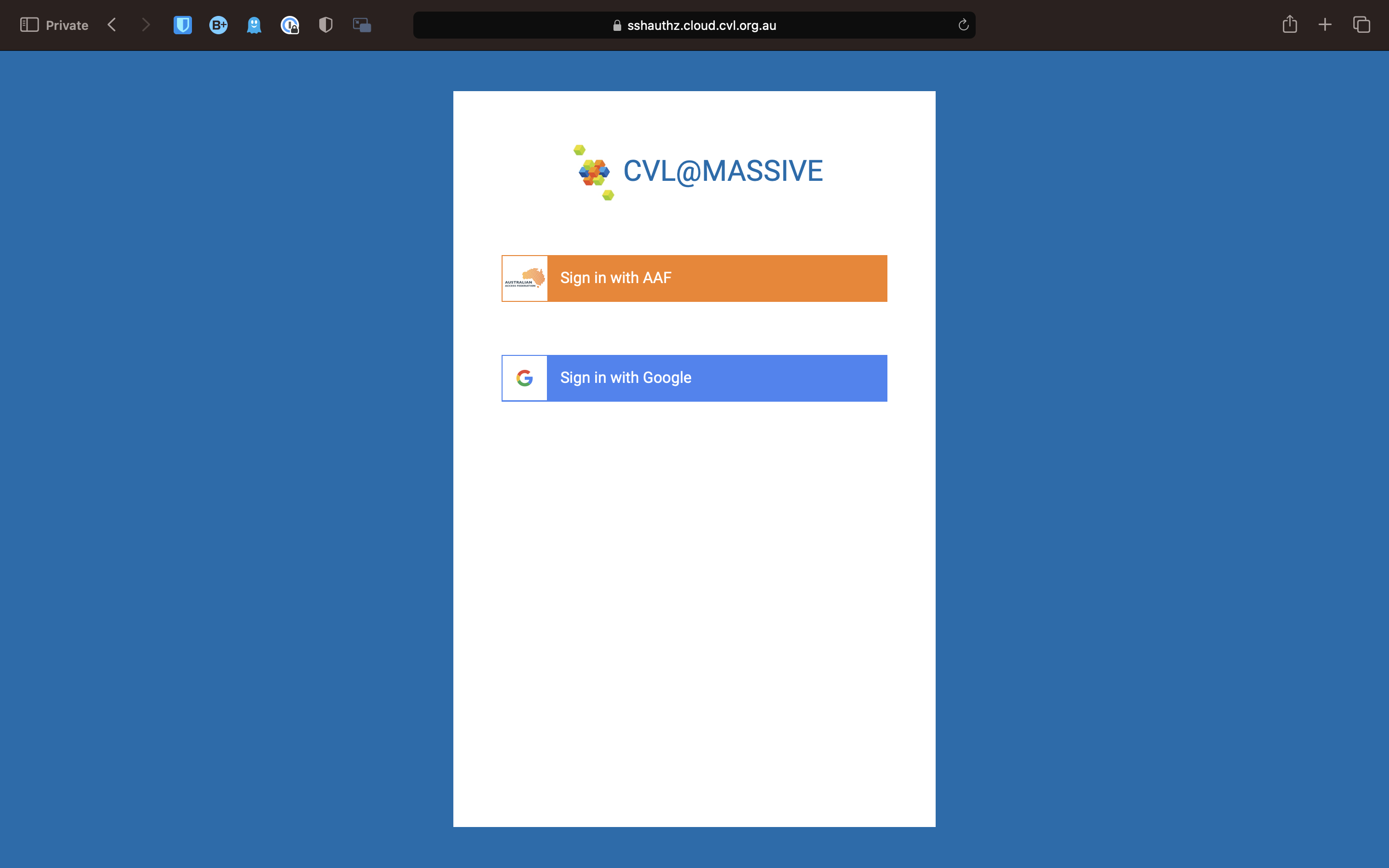

To create an identity (account) in Nectar Cloud, all you have to do is login using your Monash student account. Click this link to access Nectar's landing page.

You will see the following. Make sure to click "Login via AAF (Australia)".

You will be redirected to enter your Monash credentials after which you will see the Nectar Cloud dashboard for your trial project (your project name will be pt-xxxxx).

Cloud Starter Series

ARDC has provided this cloud starter tutorial series for people new to Nectar Cloud. You should be able to follow these tutorials using your trial project. If you need more SUs (service units aka. cloud credits) in order to provision more cloud resources for MDN-related work, you should message your HPC Lead with that request.

Challenges

As you progress through each chapter you will be given a small set of challenges to complete. In order to complete the challenges there is a complementary GitHub template repository found on the Monash DeepNeuron GitHub organisation called HPC Training Challenges. As a template you are able to create your own copy of the repository and complete the challenges completely independent of the other recruits.

Setting Up Challenges Repository

To get setup:

- Click the link above to go to the repository on GitHub.

- Click 'Use this template' button (green) and select 'Create a new repository'.

- Give it a name and make sure it is private.

- Ensure you are copying it to your personal account and not the Monash DeepNeuron organisation. There will be a dropdown next to where you give the repo a name from which you can select your account.

- Click 'Create repository from template'.

- This will open the page for the repository. Click the '<> Code' button (green), make sure you are in the HTTPS tab and copy the link.

- Open a terminal in your dev directory.

- Run:

# Clone to your machine

git clone <repo-link>

# Enter clone's directory

cd <repo-name>

# Create link to template called 'upstream'

git remote add upstream https://github.com/MonashDeepNeuron/HPC-Training-Challenges.git

# Disable pushing to template

git remote set-url --push upstream DISABLE

# Sync with 'upstream'

git fetch upstream

# Merge 'upstream' main branch with your main

git merge upstream/main --allow-unrelated-histories

# Open repository in VSCode

code .

This will clone the repository into you current working directory maintaining its link to its origin (your remote copy on GitHub) allowing you to sync changes between you local and remote copies. This also sets up a link called upstream to the original template directory with pushing disabled. This allows you to sync the base of the repository with your copy, similar to a fork but prevents changes from being pushed to the template.

Once you completed a challenge or made some changes you want to save to your remote repository you can simply add to a commit stage, combine the changes in a commit and then push the commit to origin.

git add . # Add any untracked or modified files

git commit -m "msg" # Commit changes locally with a message

git push origin # Push to your GitHub repository

If you need to sync your local repository with the remote version you can either fetch the changes to add them to the logs without modifying the codebase or pull them to integrate the changes into your version.

git fetch origin # Sync changes with remote without integrating (downloading) them

git pull origin # Sync and integrate remote changes locally

In order to sync your copy of the challenges repository with the remote template you must re-fetch the changes from upstream and then merge the upstream remote with your local repository.

git merge upstream/main --allow-unrelated-histories

Note: Look at the README.md of the repo for the for more instructions.

Challenges Repository

The challenges repository is broken down into different directories for each chapter. For each chapter their will be a series of additional directories corresponding to the specific challenge. These will contain any and all the resources needed for the challenge except programs that you are required to complete.

When you want to attempt a challenge it is good practice to create a branch. This allows you to make changes to the codebase that do not affect other branches each with their own history. You can then later merge branches to integrate changes together. To create a new branch you can use the git-branch command or the the -b flag with the git-checkout command giving both the name of the new branch. This will branch from your current position in the repositories history. Switching branches is achieved using the git-checkout command (with no -b flag). You use the regular git-add, git-commit and git-push commands interact and save the changes that only affect this new branch.

git branch <branch-name> # Create new branch

git checkout <branch-name> # Checkout to the new branch

# or

git checkout -b <branch-name> # Checkout to a new branch

For your training. I would recommend creating a new branch for every challenge you attempt and merging them with the main (default) branch once you are done. This allows you to make modifications to each of your attempts independent of each other as well as make it easier to resync with the template repository should anything change at its base. it also allows you to get some meaningful practice with Git which is one of the most used developer tools in the world.

When you want to merge your changes, checkout back to the main branch and run a merge request. This will pull the changes from the deviating branch into main and update it accordingly.

# On your deviant branch

git fetch origin

git checkout main

git fetch origin

git merge <branch-name>

Note: Merging sometimes creates merge conflicts. This happens when the two branches histories cannot automatically merge. Git has many tools to assist resolving these conflicts and there is a plethora of resources online to assist you. If you get stuck do not hesitate to reach out.

Brief Introduction to C

What is C?

What is C? You may have heard of a something called C in your classes or online but may be unaware of what it is and what it is used for. Simply put C is a general purpose programming language developed at Bell Labs in 1972 by Dennis Ritchie. It was designed to closely reflect the architecture and capabilities of the target device. It was popularized in large part due to its usage and role in the UNIX operating system. Before languages like C, developers and engineers had to mostly use assembly languages; the instruction code that was specific to every single device (CPU in particular), meaning an application for one device would have to be rewritten in the new devices assembly language. C aimed to make this easier, creating a unified language that could then be compiled to any target architecture. The 'write once, compile everywhere' philosophy. This dramatically increased the capabilities of developers to create portable applications that were also easier to write.

Design

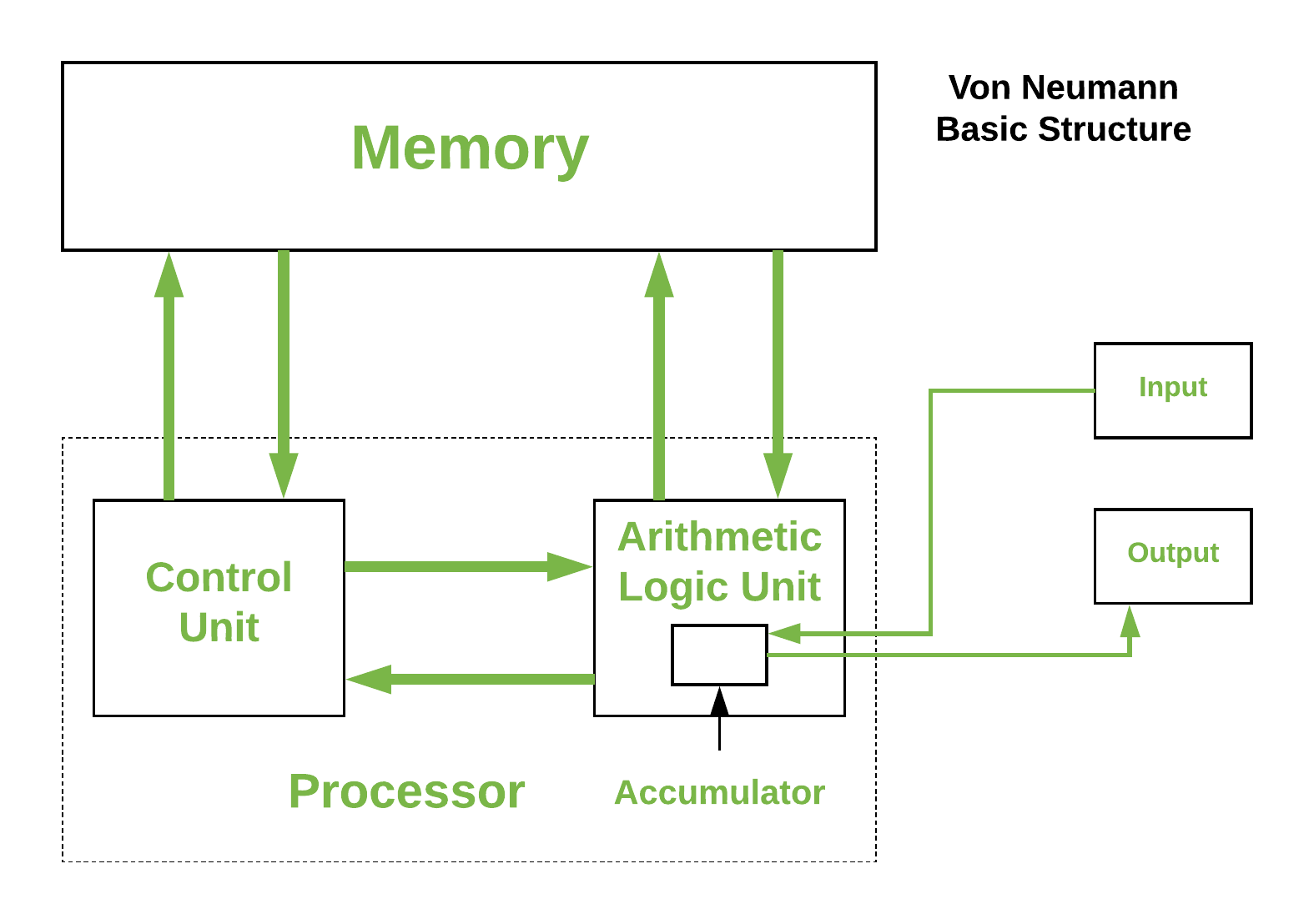

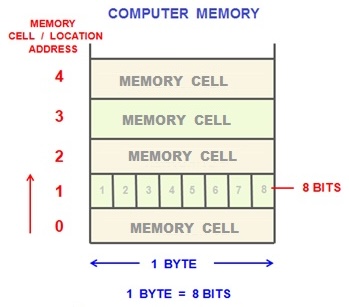

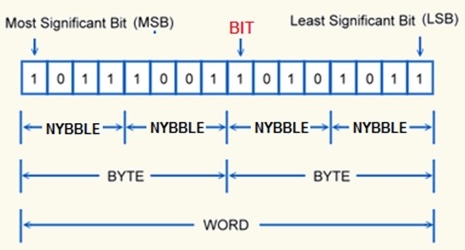

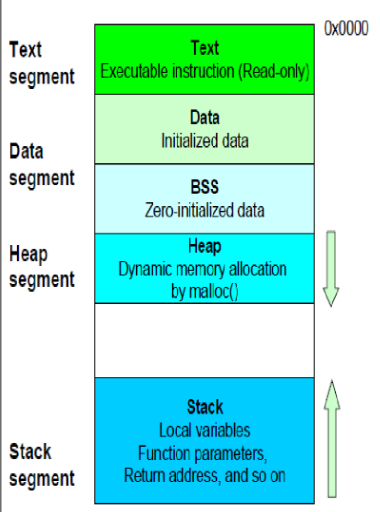

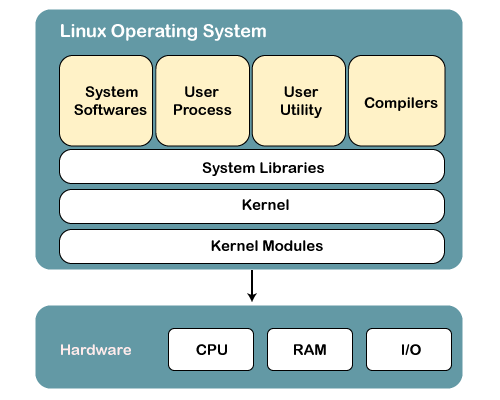

C is a very simple programming language. It has relatively minimal syntax, a small standard library and only a few primitive data types and entities. C's power comes from its simplicity, it allows developers to utilise any and all components of a computer in any way the developer sees fit. This is because C is still able to target various system-level operations such as allocate memory and make system calls. This capability is derive from C originating as the language that was used to create the UNIX operating system, the predecessor of Linux and MacOS. C and UNIX were developed simultaneously meaning any operation they needed UNIX to perform had to be accessible from C. C also has a very simple memory model that closely reflects how computer memory is designed today which follows Alan Turing's original description of a Turing machine ie. memory is an infinitely (not truly infinite, but for arguments sake) long tape of individually addressable cells.

Technical Description

C is a static and weakly typed language. What are types? Types are in essence a form of structure, typically dictated by their layout ie. their size in memory. Every language has type system which dictates the operations that can be performed on a particular types and the semantics for when these operations can occur. A statically typed language means that the compiler must know the type of every piece of data in a program. This is because data has a fixed width in C meaning any program written in C must have a known size such that the it can actually run on a machine. Weakly typed describes a language for which data types are allowed to have implicit conversions. This means that you can utilise the same data but in a different shape. This is sometimes useful but more often is a pitfall to the language.

Family History

While many people will talk about the C family of languages, many of the execution techniques used in C were inspired by another language called ALGOL developed in the late 50's. Many of the principles in ALGOL were using in C. See is also the successor to the B programming language (also developed at Bell Labs by Ken Thompson and Dennis Ritchie). C has inspired almost every procedural language used today and has had a massive influence on other language families. Some of the descendants of the C language include C++, Swift, JavaScript, PHP, Rust, HolyC, Java, Go, C#, Perl and (depending who you ask) Python.

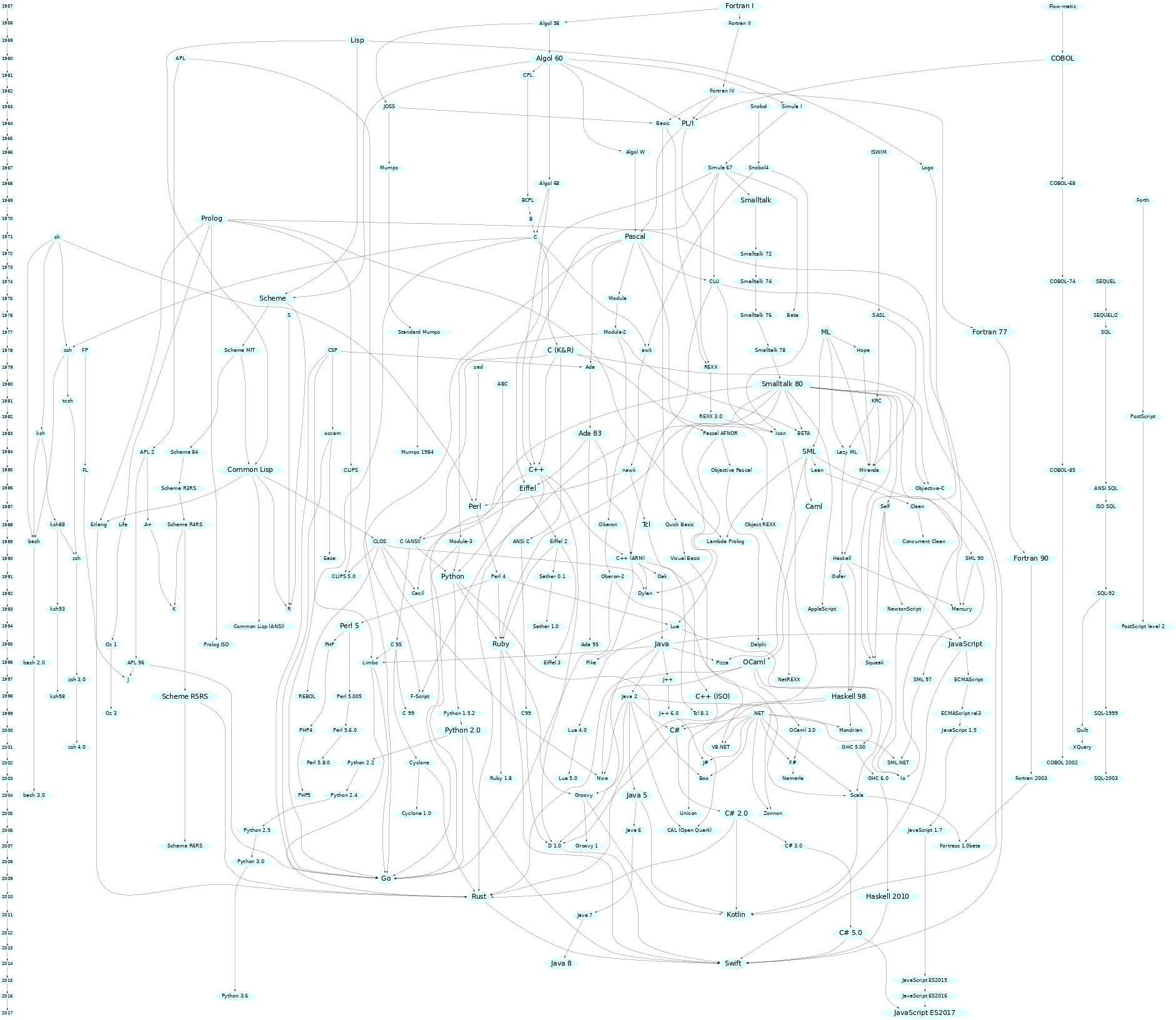

Entire (mostly) Programming Language Lineage

- Source rigaux.org

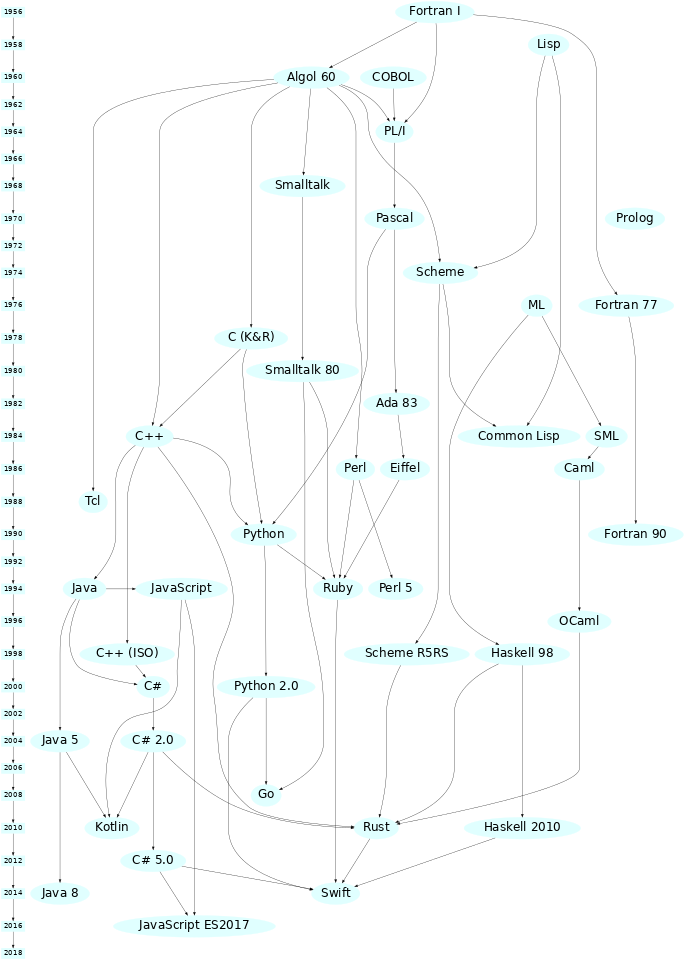

Simplified Programming Language Lineage

- Source rigaux.org

Hello World

If you have ever programmed before you may be familiar with 'Hello World'. If not, a 'Hello World' program is often the first program you write in a programming language. It serves a brief introduction to the languages syntax, a simple workflow in that language and also as a sanity check to ensure the developer tools for the project were installed and setup correctly.

Fun Fact:

The 'Hello World' test program was popularized by Brian Kernighan's Programming in C: A Tutorial.

Setup

To begin open a new terminal window. If you don't already have a development directory it is a good idea to create one. This should be where you host all your projects.

# Optional

mkdir dev

cd dev

In this directory (or another of your choosing) we are going to create a directory for our 'Hello World' project. Finally, we'll open VSCode in this location so that we can easily edit our code.

mkdir hello

cd hello

code .

Note:

Windows users will have to use the Developer Command Prompt used in the Windows section of the last chapter. You will then need to navigate to a different directory. Ideally use your 'Home' directory.

cd C:\Users\<username>You should be able to follow the instructions above normally.

Compiling and Running

'Hello World' in C is quite simple, although not as simple as say Python. First create a new file called main.c.

#include <stdio.h>

int main()

{

puts("Hello World\n");

return 0;

}

To compile and run the program simply run the commands below. The output will be an executable called main (main.exe on Windows) located in the same directory as the source file.

Linux, MacOS & WSL

gcc -o main main.c

./main

Hello World

Windows

cl main.c

main.exe

# ...

Hello World

What's Going On Here?

Let's break down the 'Hello World' program a bit to see what is going on.

#include <stdio.h>

On the first line we have we have included a library. This is the Standard IO library. Libraries come in the form of one or more headers, denoted by the *.h file extension. More on headers in the next section. The #include is called a preprocessor directive. It is resolved at compile time meaning it does not exist at runtime. Here #include will copy (yes, literally copy) the entire contents of the file specified. In this case, it is the file stdio.h. This means that the symbols from this header are now available in our program.

int main()

{

/// ...

return 0;

}

main() is a function. Functions are subroutines that allow us to run a piece of code many time without repeating it everywhere we need it. In C, the main() function has a special meaning, it is the entry point of the application. After some initial setup from the OS, this is first section of the application to run. Here main() takes no arguments however, sometimes it will take two special arguments which are used to communicate information from the outside world to the program at the point of execution. Here, we indicate main() returns an int. The return value is used by the OS to handle specific errors that may occur. A return value of 0 from main indicate success with any non-zero value indicating an error.

puts("Hello World!");

puts() is another function. It was obtained from the stdio library we included before. puts() means 'put string'. It takes a null-terminating string as input and returns the op-code indicating success or failure. As a side effect,puts() writes the string to the stdout file-stream ie. outputs the string to the terminal and appends a newline character at the end. Both the call to puts() and return end in a semicolon. This indicates the end of the line/expression and is required for almost every line of C.

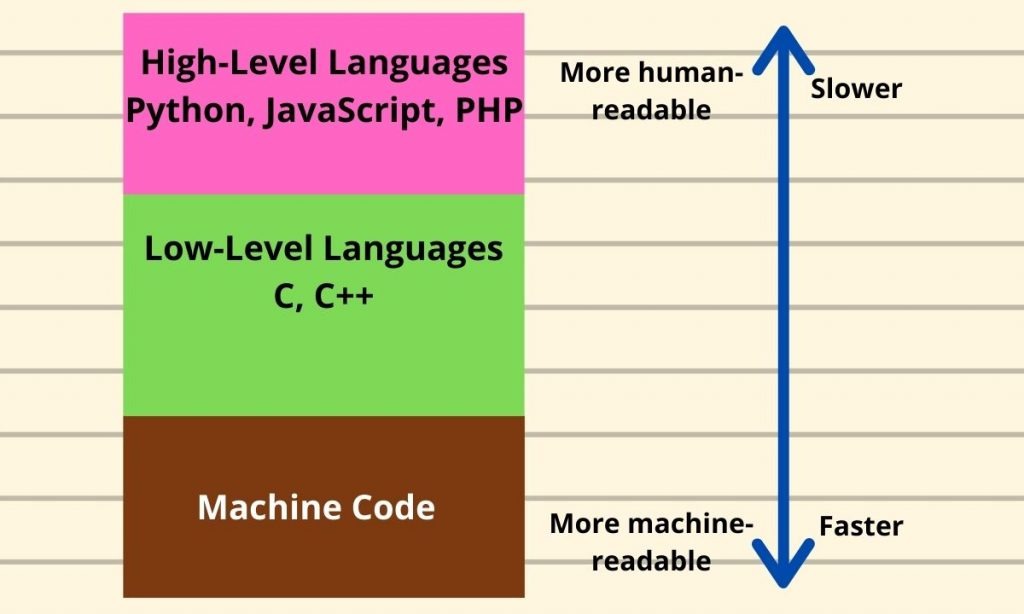

Compilation

C is a compiled language. This means that all the source code is compiled to machine code before the application is run. This means that the compiler needs to know a lot about a program before it can run. Compilation is the process of combining source files into a single binary and because C is a structured language, the order in which files are compiled is important. C also sometimes requires files to be linked together. This occurs when two source files depend on a common library source file.

Header & Source Files

In C there are two kinds of files that are used. Source files and header files. These must be combined appropriately by the compiler in order to produce a working executable.

Header File

Headers are declaration files. They define and state that certain symbols (functions, types etc.) exist such that other headers and source files can use them without knowing there full definition (how they work) yet. Within a header, you define what is called the signature of a function or type. This allows the C type system to validate the uses of the symbol before it even compiles the symbol. Headers in C use the *.h file extension.

Source Files

Source files are the core of any language. They hold the definition of every symbol defined in a codebase. Source files for C use the *.c file extension. Source files are what actually get compiled binary machine code. Source files are often compiled into object files first and then linked together using a linker when they depend on each other or external libraries.

Compiling & Linking

There are four main steps in the compilation pipeline for C programs.

- Pre-process - The Preprocessor is invoked, which includes headers in source files and expands macros. Comments are also stripped at this step. The step creates the Translation Unit (TU) for a source file.

- Compilation - The TU's for each source file is then compiled individually into assembly code. During this step the Abstract Syntax Tree is created for the program and is lower to an Higher Intermediate Representation (HIR). Generally this is created in assembly language of the target platform/CPU.

- Assembly - This step involves lowering the HIR once again into an Intermediate Representation (IR) ie. it assembles the assembly code into an binary object file.

- Linking - This is the final step. Once objects files are created for each TU, we can link them together as well as link any external libraries to form an executable (or binary library file). Linking provides information to an executable about to file the definition of symbols at runtime so that functions will actually execute the correct code. Before this step, the object files just new that certain symbols existed but not where to find them.

Types & Variables

Fundamental Data Types

In C there are six fundamental data types, bool, char, int, float, double and void. There are also a variety of modified integral and floating point types to allow for different sizes.

bool- Boolean type, can either betrueorfalse. It's width is 8-bits (1 byte).char- Character type. Can be unsigned or signed. Represents any ASCII character value. Usually has a width of 8-bits (1 byte).short intorshort- Number type, can be signed or unsigned. Only represents whole number values. Usually has a width of 16-bits (2 bytes).int- Number type, can be signed or unsigned. Only represents whole number values. Usually has a width of 32-bits (4 bytes).long intorlong- Number type, can be signed or unsigned. Only represents whole number values. Sometimes has a width of 32-bits (4 bytes) or 64-bits (8 bytes).long long intorlong long- Number type, can be signed or unsigned. Only represents whole number values. Has a width of 64-bits (8 bytes).float- Single precision floating point type, represents decimal number values. Usually has a width of 32-bits (4 bytes).double- Double precision floating point type, represents decimal number values. Usually has a width of 64-bits (8 bytes).long double- Extended double precision (or quadruple precision) floating point type, represents decimal number values. Usually has a width of at least 64-bits (8 bytes) but sometimes has a width of 128-bits (16 bytes).void- Incomplete data type. Indicates the absence of a type of value.

Note:

bool,charandint(and sized variants) are defined as integral types ie. they are all number types.

Variables

Variables are integral to computer programming. Variables are objects that own a piece of data. What data or rather value of a variable can change throughout the lifetime of a program. To declare a variable in C, we first declare its type. The type predicates which operations are valid for that variable as well as tells the compiler the size of the variable. We then git it a name and assign it an initial value.

/// General syntax: type name = value

int a = 10;

In C variables have 'value semantics', this means that the data of a variable is always copied. For the example above, the data representing the literal 10 is copied into the location of a by the assignment operator (=).

Note: Often the compiler will likely try to construct variables (like

a) directly to the evaluated value of the right-hand-side of the=ie. constructadirectly from10rather than createawith a dummy value and then copy10toa's location. This is called copy elision or return value optimization.

You can also create new variables and initialize them to the value of an existing variables using the same syntax. Because C uses value semantics, b now has its own copy of the data owned by a. These can now be modified independently of each other.

int a = 10;

int b = a;

Note:

- Literals are data with a constant value that are predefined. They are often used to initialise variables to a particular starting value.

- A

charliteral is a single letter surrounded in single quotes eg.'a'is a literal for the letter 'a'.

Constant Data

Sometimes you want data to be constant or immutable. This is data that does not and cannot change during its lifetime. To do this in C we use the const qualifier before the type of a variable. This marks some variable as constant. Constant data must be given an initialiser or the program will not compile. const can be applied to any variable of any type but a constant variable cannot be modified to be mutable however, you can create a copy of a constant variable that is mutable.

const int a = 4;

Static Data

In C you can also allows you to create data that will exist for the entire lifetime of the program and is declared with the static keyword before the type initialiser. This kind of data is said to have static storage duration which means that it remains 'alive' or valid for the entire duration of the program and will not automatically get cleaned up when the it has left scope. This has some interesting implications but the most useful is its usage in function blocks. Static variables allow data to persist between function calls, meaning that if you invoke a function that owns a static variable; say an int, was left with the value 9 once the called had completed, if you were to recall the function and inspect the value the static variable it would still contain the value 9 at the start of the call. This allows you to keep data between function calls.

static int a = 9;

There are other more advanced usages of static that allow you to control the linkage of different translation units (source and object files) but they are beyond the scope of this book.

Operators

Operators are the most primitive way to manipulate data and variables in C. There are four major categories for operators these being arithmetic, bitwise, logical and assignment. Each operator is written in either infix (binary), prefix or prefix (unary) form. Most operators return the result of their evaluation meaning it can can be assigned to a new variable however, some modify the data in-place, this includes all assignment operators and the increment and decrement operators (which do both).

Note: Parenthesis are used to control the order of execution, separating sub-expressions.

Arithmetic Operators

Arithmetic operators work for integral and floating point type. They are the most common type of operator used in C.

| Operator | Name | Description | Example |

|---|---|---|---|

+ | Addition | Adds two values | a + b |

- | Subtraction | Subtracts two values | a - b |

* | Multiplication | Multiplies two values | a * b |

/ | Division | Divides two values | a / b |

% | Modulo Division | Modulo divides two values ie. returns the remainder of the division of two numbers | a % b |

++ | Prefix Increment | Increment the value in-place and return new value | ++a |

-- | Prefix Decrement | Decrement the value in-place and return new value | --a |

++ | Postfix Increment | Increment the value in-place and return old value | a++ |

-- | Postfix Decrement | Decrement the value in-place and return old value | a-- |

+ | Posigation | Set sign of value to positive | +a |

- | Negation | Set sign of value to negative | -a |

Notes:

- Binary arithmetic operators will a return value whose type is the larger of

aorb.- If

aorbis smaller than its counterpart, the smaller will be implicitly promoted to a larger type.- Division between two integral types performs integer division.

- Division between a floating point type and any other type (integral or floating point) performs floating point division by implicitly promoting the integral or smaller argument to an adequate type or size.

- Modulo division does not exist for floating point types.

Bitwise Operators

Bitwise operators are used to manipulate the individual bits of an integral type allowing precise control of the most fundamental data type.

| Operator | Name | Description | Example |

|---|---|---|---|

~ | Complement | Inverts the bits of a values | ~a |

& | And | Ands the bits of two values | a & b |

| | Or | Ors the bits of two values | a | b |

^ | Exclusive Or (Xor) | Xors the bits of two values | a ^ b |

<< | Left Shift | Shifts the bits of a to the left by b positions. | a << b |

>> | Right Shift | Shifts the bits of a to the right by b positions. | a >> b |

Note:

- Bitwise operators do not exist for floating point types.

- Bits are lost from shift operators.

- Left shift (

<<) pads with zeros ie. adds a zero in the new empty position.- Right shift (

>>) pads with the most significant bit ie. the new empty position is filled with the same value as the previous occupant.- Left and right shifts are formally described respectively as: \(a << b ≡ a * 2^{b} mod(2^{N})\) and \(a >> b ≡ \frac{a}{2^{b}} mod(2^{N})\) where \(N\) is the numbers bits in the resulting value.

Logical Operators

Logical operators operate on Boolean expressions statements. They only evaluate to another Boolean expression (ie. type bool).

| Operator | Name | Description | Example |

|---|---|---|---|

! | Not | Negates the Boolean. | !a |

&& | Logical And | Both a and b must be true. | a && b |

|| | Logical Or | Either a or b must be true. | a || b |

== | Equals | a is equal to b. | a == b |

!= | Not Equal | a is not equal to b. | a != b |

< | Less | a is less than b. | a < b |

> | Greater | a is greater than b. | a > b |

<= | Less than or equal | a is less than or equal to b. | a <= b |

>= | Greater than or equal | a is greater than or equal to b. | a >= b |

Assignment Operators

Assignment operators will perform a binary operation between two values and assign the result to the left argument (excluding =).

| Operator | Name | Description | Example |

|---|---|---|---|

= | Assign | Assigns the value of b to a | a = b |

+= | Add Assign | Assigns the value of a + b to a | a += b |

-= | Subtract Assign | Assigns the value of a - b to a | a -= b |

*= | Multiply Assign | Assigns the value of a * b to a | a *= b |

/= | Divide Assign | Assigns the value of a / b to a | a /= b |

%= | Modulo Divide Assign | Assigns the value of a % b to a | a %= b |

&= | And Assign | Assigns the value of a & b to a | a &= b |

|= | Or Assign | Assigns the value of a | b to a | a |= b |

^= | Xor Assign | Assigns the value of a ^ b to a | a ^= b |

<<= | Left Shift Assign | Assigns the value of a << b to a | a <<= b |

>>= | Right Shift Assign | Assigns the value of a >> b to a | a >>= b |

The result of any expression containing operators can be assigned to a new or existing variable by simply using the expression as the right argument of =.

/// The value of a is the result of the expression.

double a = (6 + 7) / (5.0 * 4); ///< a == 0.650000

sizeof

There is also one final operator called the sizeof operator which returns the number of bytes a particular piece of data occupies in memory. The sizeof operator uses a function call syntax with the argument being the data to be queried.

int a = 4;

double b = 3.154;

int sz_a = sizeof(a); //< 4

int sz_b = sizeof(b); //< 8

Enumerations

The last data type we will look at is the enum. Enums are another integral data type however, they have a limited number of possible states where each state is named by the user. For example consider a Boolean type Bool; although a builtin type can be represented by a enum with its possibles states being False and True. The states or enumerators of an enum are integral constants ie. each name has a particular integer value associated with it. Using the Bool example again, the value of False could be 0 and the value of true could be 1. This would restrict a Bool to only being True or False (1 or 0).

enum Bool { False = 0, True = 1 };

Enums in C can be named (like Bool) or unnamed where the variants are simply generated as named integral constants (similar to just creating constant variables for each variants). Enum variants are accessible as long as the enum is in scope meaning I could use say False anywhere in the program that Bool is in scope without having to express in the language directly that False comes from Bool. The enumerators of an enum always have an underlying type of int meaning they can be used like constant integer value due to C's weak type system. Enumerators will always start with a value of 0 if no value is specified and increase for each subsequent variant however, it is possible to specify any value for variants as long as they are unique.

Type Casting

Often it is useful to be able to convert data of one type to another. This can be done via type casting. This involve prefixing a variable (or function call return) with the desired type you want to cast to surrounded in parenthesis. This will cast the bits of the current variable to the new type which can then be save to a variable of the appropriate type or passed to a functions expecting that type.

#include <stdio.h>

int main()

{

int i = 97;

printf("i = %d\n", i);

char ic = (char)i; //< Cast to char

printf("i = ic = '%c'\n", ic);

return 0;

}

Printing

We're going to briefly discuss how to print stuff to the terminal so you can start writing some C.

printf

Earlier we saw the puts() function which prints strings to the terminal. This function is really good for strings but does not work for any other data type. Instead, there is the printf() function which prints formatted text to the terminal. This allows you to print different data types to the terminal and control various aspects of the output.

Signature

The general signature of printf() is quite unique in C and how it achieves it is a bit of compiler magic in order to actually implement but you do not have to worry about it. printf() takes as its first argument a string that represents the output format of the printed text. The next argument is the .... This is called the ellipsis and it is used to represent a variable number of untyped function arguments. This allows you to pass ass many arguments as you want to printf() and it will print them all as long as there are an equivalent number of positional arguments in the format string. The variadic arguments are inserted in output string in the same order they are passed to printf() ie. there is now way to indicate in the format string which variadic argument to use at any given positional argument. The positional argument introducer character is the % followed by a modifier to indicate in incoming type.

printf(char* fmtstring, ...);

Note:

- Ignore the use of

char*for now.printf()is different toputs()in that it doesn't pad the end if the output string with a newline so you will have to manually provide it. The newline character is'\n'. The backslash is a special character that indicates the proceeding character is "escaped". Escaped characters have special meanings for string and character data. If the format string doesn't have any positional arguments thenprintf()will just print the string likeputs().printf()is not able to print data of any kind without a format string ie.printf(10)would fail to compile.

Example

The following simple code snippet creates to variables num and dec and computes their sum. It then prints a string according to the format "%d + %f = %f", substituting num, dec and sum respectively.

#include <stdio.h>

int main()

{

int num = 4;

double dec = 3.54756;

double sum = num + dec;

printf("%d + %f = %f", num, dec, sum);

return 0;

}

Question: Notice how we used

doublefor the type ofsum. What would happen ifsumtype wasint?

If you want to have a play with printf(), copy the following code snippet run it on your own device. The command line will output different varieties of 'Hello World!'.

#include <stdio.h>

int main() {

printf("%30s\n", "Hello World!"); // Padding added

printf("%40s%10s%20s%15s\n", "Hell", "o", "World", "!");

printf("%10.7s\n", "Hello World!"); // Print only the first 7 characters with padding

printf("%100c%c%c%c%c %c%c%c%c%c%c%c\n",

72, 101, 108, 108, 111, 32, 87, 111, 114, 108, 100, 33, '\n'); // Hex values

return 0;

}

Formatting Specification

You'll notice we used a different character after the % for each argument. This is because printf() needs to know the type of the incoming arguments so that it can format the string appropriately. For example floating point types have to use a decimal point when transformed into a text format while integers do not.

C has a concise language for printf() format arguments with the general format for a positional argument specifier being:

_%\[flag\]\[width\]\[.precision\]\[length\]type-specifier_

There are a variety of different options for each part of the specification. Below is a series of tables breaking down the various options for each sub-specifier but note that only type-specifier is needed, the others are optional.

Type Specifiers

| Type Specifier | Type | Example |

|---|---|---|

% | Two sequential % will result in a single % being printed. | % |

d or i | Signed Decimal Integer | 392 |

u | Unsigned Decimal Integer | 7235 |

o | Unsigned Octal Integer | 610 |

x or X | Unsigned Hexadecimal Integer (X: uppercase variant) | 7fa or 7FA |

f or F | Decimal Floating Point (F: uppercase variant for special numbers eg. nan vs NAN) | 392.65 |

e or E | Scientific Notation (mantissa and exponent) (E: uppercase variant) | 3.9265e+2 or 3.9265E+2 |

g or G | Use the shortest representation: %e or %f (G: uses uppercase variants) | 7fa or 7Fa |

a or A | Hexadecimal Floating Point (A: uppercase variant) | 7fa or 7Fa |

c | Character | a |

s | String | example |

p | Pointer Address | 0x7ffce531691c |

n | Prints nothing. The argument corresponding to this specifier must be pointer to a signed integer. Stores the number of character written so far. |

Flags

| Flag | Description |

|---|---|

- | Left-justify within the given field width; Right justification is the default (see width sub-specifier). |

+ | Forces to preceed the result with a plus or minus sign (+ or -) even for positive numbers. By default, only negative numbers are preceded with a - sign. |

| space | If no sign is going to be written, a blank space is inserted before the value. |

# | Used with o, x or X specifiers the value is preceded with 0, 0x or 0X respectively for values different than zero. Used with a, A, e, E, f, F, g or G it forces the written output to contain a decimal point even if no more digits follow. By default, if no digits follow, no decimal point is written. |

0 | Left-pads the number with zeroes (0) instead of spaces when padding is specified (see width sub-specifier). |

Width

| Width | Description |

|---|---|

| number | Minimum number of characters to be printed. If the value to be printed is shorter than this number, the result is padded with blank spaces. The value is not truncated even if the result is larger. |

* | The width is not specified in the format string, but taken from the next variadic argument from printf(). |

Precision

| .precision | Description |

|---|---|

| .number | For integer specifiers (d, i, o, u, x, X): precision specifies the minimum number of digits to be written. If the value to written is shorter than this number, the result is padded with leading zeros. The value is not truncated even if the result is longer. A precision of 0 means that no character is written for the value 0. For a, A, e, E, f and F specifiers: this is the number of digits to be printed after the decimal point (by default, this is 6). For g and G specifiers: This is the maximum number of significant digits to be printed. For s: this is the maximum number of characters to be printed. By default all characters are printed until the ending null character is encountered. If the period is specified without an explicit value for precision, 0 is assumed. |

.* | The precision is not specified in the format string, but taken from the next variadic argument from printf(). |

Length

| Type Specifier | |||||||

|---|---|---|---|---|---|---|---|

| Length Modifier | d, i |

u, o, x, X |

f, F, e, E, g, G, a, A |

c |

s |

p |

n |

| (none) | int |

unsigned int |

double |

int |

char* |

void* |

int* |

hh |

signed char |

unsigned char |

signed char* |

||||

h |

short int |

unsigned short int |

short int* |

||||

l |

long int |

unsigned long int |

wint_t |

wchar_t* |

long int* |

||

ll |

long long int |

unsigned long long int |

long long int* |

||||

j |

intmax_t |

uintmax_t |

intmax_t |

||||

z |

size_t |

size_t |

size_t |

||||

t |

ptrdiff_t |

ptrdiff_t |

ptrdiff_t |

||||

L |

long double |

||||||

Input

We're now going to discuss how to take user input from the standard input (stdin) in C.

scanf

scanf() allows you to read different data types from the user's input and store them in variables, making it a versatile function for interactive applications. It enables you to control the input format, extract data from the input stream, and store it in the appropriate variables.

Signature

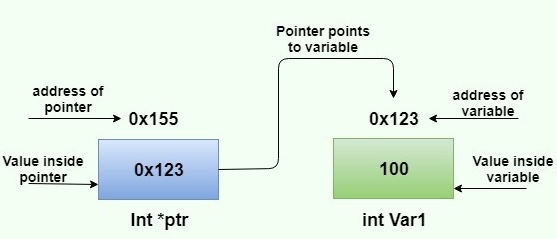

The general signature of scanf() is similar to printf(), it takes a format string indicating the format of the input sequence and a variadic list of addresses (pointers)where values are stores. scanf()'s format string uses a similar format specification to printf() however, the variable specifiers (denoted with %) indicate values that are to be read in and stored in program variables. As we are reading values in with scanf(), when we wish to store a variable we must pass the address of the variable we want to store the value in. This is because variables have copy semantics in C meaning we cannot pass a variable to a function and get the modified value with returning it or using a pointer. scanf() uses pointers.

scanf(char* fmtstring, ...);

Example

This simple program is similar to the printf() example we saw earlier in the book except, instead of hard coding values we are able to take input from the user in the form x + y and the program will return the result.

#include <stdio.h>

int main()

{

double a = 0.0;

double b = 0.0;

double sum = 0.0;

printf(">> ")

scanf("%f + %f", &a, &b);

sum = a + b;

printf("%f", sum);

return 0;

}

Formatting Specification

The format specification is a mirror to that used by printf() (there are many _f() functions in the C standard library that all use similar format specifications) as values being read in must conform to a given C type. This allows us to easily convert the incoming string data as int, double or even substrings.

The general format specification is as below:

_%\[width\]\[.precision\]\[length\]type-specifier_

There are a variety of different options for each part of the specification. Below is a series of tables breaking down the various options for each sub-specifier but note that only type-specifier is needed, the others are optional.

Type Specifiers

| Type Specifier | Type | Example |

|---|---|---|

% | Two sequential % will result in a single % being printed. | % |

d or i | Signed Decimal Integer | 392 |

u | Unsigned Decimal Integer | 7235 |

o | Unsigned Octal Integer | 610 |

x or X | Unsigned Hexadecimal Integer (X: uppercase variant) | 7fa or 7FA |

f or F | Decimal Floating Point (F: uppercase variant for special numbers eg. nan vs NAN) | 392.65 |

e or E | Scientific Notation (mantissa and exponent) (E: uppercase variant) | 3.9265e+2 or 3.9265E+2 |

g or G | Use the shortest representation: %e or %f (G: uses uppercase variants) | 7fa or 7Fa |

a or A | Hexadecimal Floating Point (A: uppercase variant) | 7fa or 7Fa |

c | Character | a |

s | String | example |

p | Pointer Address | 0x7ffce531691c |

n | Prints nothing. The argument corresponding to this specifier must be pointer to a signed integer. Stores the number of character read so far. | |

[set] | Matches a non-empty sequence of character from set of characters. If the first character of the set is ^, then all characters not in the set are matched. If the set begins with ] or ^] then the ] character is also included into the set. It is implementation-defined whether the character - in the non-initial position in the scanset may be indicating a range, as in [0-9]. If a width specifier is used, matches only up to width. Always stores a null character in addition to the characters matched (so the argument array must have room for at least width+1 characters) | |

* | Assignment-suppressing character. If this option is present, the function does not assign the result of the conversion to any receiving argument (optional). |

Width

| Width | Description |

|---|---|

| number | Minimum number of characters to be printed. If the value to be printed is shorter than this number, the result is padded with blank spaces. The value is not truncated even if the result is larger. |

Precision

| .precision | Description |

|---|---|

| .number | For integer specifiers (d, i, o, u, x, X): precision specifies the minimum number of digits to be read. If the value read is shorter than this number, the result is padded with leading zeros. The value is not truncated even if the result is longer. A precision of 0 means that no character is written for the value 0. For a, A, e, E, f and F specifiers: this is the number of digits to be printed after the decimal point (by default, this is 6). For g and G specifiers: This is the maximum number of significant digits to be printed. For s: this is the maximum number of characters to be printed. By default all characters are printed until the ending null character is encountered. If the period is specified without an explicit value for precision, 0 is assumed. |

Length

| Type Specifier | |||||||

|---|---|---|---|---|---|---|---|

| Length Modifier | d, i |

u, o, x, X |

f, F, e, E, g, G, a, A |

c |

s |

p |

n |

| (none) | int |

unsigned int |

double |

int |

char* |

void* |

int* |

hh |

signed char |

unsigned char |

signed char* |

||||

h |

short int |

unsigned short int |

short int* |

||||

l |

long int |

unsigned long int |

wint_t |

wchar_t* |

long int* |

||

ll |

long long int |

unsigned long long int |

long long int* |

||||

j |

intmax_t |

uintmax_t |

intmax_t |

||||

z |

size_t |

size_t |

size_t |

||||

t |

ptrdiff_t |

ptrdiff_t |

ptrdiff_t |

||||

L |

long double |

||||||

Arrays & Strings

There are two vital data types we haven't formally looked at yet. These are the string and array data types. These are integral to building collections of data and being able to store large chunks of data in a single variable.

Strings

What are strings? Strings are a sequence bytes represented as a collection of characters (chars) that (typically) end in a null-string-terminator. Strings are the primary type used to interact with any form of IO with all forms of data being serialized to and from strings. C doesn't have a dedicated type for strings. This is because strings are just a collection of char and this can simply be represented as a contiguous block of memory interpreted as char. To create a block of char, use the [] initialiser after the variable name. This will create a block that is the same size as its initialiser string. String literals are introduced using double quotes. eg.:

char str[] = "Hello";

Note:

- Unlike some languages; like Python, there is a big difference between single quotes (

'') and double quotes (""). Single quotes are exclusive to character types while strings are always double quotes, even if they only store a single character.- If you have intellisense and hover over a string literal you might notice it states its size as one more then the number of characters actually in the string. This is because all string literals have an invisible character

'\0'called the null-terminator which is used to denote the end.

Arrays

Strings are not the only collection type; in fact, they are a specialisation of a more generic structure in C called arrays. Arrays represent a contiguous sequence of elements, all of which must be of the same type. Arrays also must have a known size at compile time meaning they cannot be dynamically resized. Elements of an array are accessed using the subscript operator [] which takes a 0-based index. Arrays in C are very primitive and are designed to be a close analogy to a machine memory. Array types are any variable name suffixed with []. The size of the array can be explicitly set by passed an unsigned integral constant to the square brackets however, if the initial size is known then the size can be elided. Arrays are initialised using an initialiser list which are a comma separated list of values surrounded in braces ({}) with strings being the only exception.

Note: Because there are no complex types in C, strings are just an array of

char.

#include <stdio.h>

int main()

{

int a[] = { 1, 2, 3, 4 };

char b[5] = { 'H', 'e', 'l', 'l', 'o' };

printf("{ %d, ", a[0]);

printf("%d, ", a[1]);

printf("%d, ", a[2]);

printf("%d }\n", a[3]);

printf("\"%c", b[0]);

printf("%c", b[1]);

printf("%c", b[2]);

printf("%c", b[3]);

printf("%c\"\n", b[4]);

return 0;

}

Control Flow

Control flow is an integral part of any computer program. They allow use to change which parts of a program run at runtime. C features three main categories of control flow, the first being if statements and its extensions which are the most common type of control flow used in C. The other two are switch statements and the ternary operator which provide slightly different semantics to their if counterparts.

if Statements

if statements are the most primitive form of control flow in programming. In essence, some block of code is isolated from the rest of the program, protected by some Boolean expression. If the Boolean expression evaluates as truth then the block of code is executed. In C the keyword if is used to introduce an if clause. This is the part of the statement that contains a Boolean expression (called a redicate) which is evaluated on arrival. The rest of the if statement is a block of code nested in braces which only executes when the if clause is true.

#include <stdio.h>

int main()

{

int a = 4;

if (a > 5)

{

puts("a > 5");

}

return 0;

}

What do you think the output of the above code is?

else Statements

Often an if statement on its own is not enough because there will always be two potential outcomes of the Boolean predicate the true and the false branches and we will often want to handle the case when the predicate fails. This is where an else statement comes in. else statements have no predicate clause as it is bound to the alternative outcome of an if clause. C uses the else keyword to introduce the else statement which is just another code block surrounded in braces.

#include <stdio.h>

int main()

{

int a = 4;

if (a > 5)

{

puts("a > 4");

}

else

{

puts("a <= 4");

}

return 0;

}

Note:

The placement of braces in C is not strict ie. the above can be written as:

#include <stdio.h> int main() { int a = 4; if (a > 5) { puts("a > 4"); } else { puts("a <= 4"); } return 0; }

else-if Clauses

C allows use to extend the usage of else statements with additional if clauses. This allows you to form an else-if clause which allows you to test multiple predicates and select only one block of code to execute. These additional clauses are called branches of the program as the line of execution can differ depending on runtime conditions.

#include <stdio.h>

int main()

{

int a = 4;

if (a > 5)

{

puts("a > 4");

}

else if (a == 4)

{

puts("a == 4");

}

else

{

puts("a < 4");

}

return 0;

}

Note:

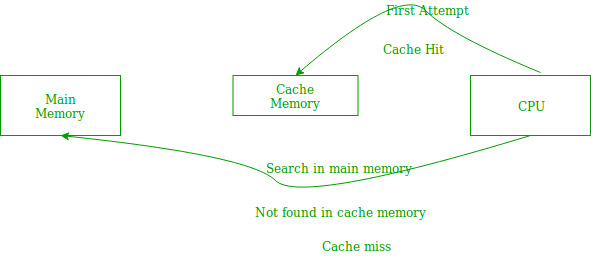

Inefficient usage of branching constructs can cause massive slow downs of many systems at large scales due to a CPU level optimisation called branch prediction which tries to 'predict' which branch is most likely to occur and load the instructions corresponding to its code block into the cache ahead of time. However, a large number of branches increases the chance of these algorithms being incorrect which can lead to a cache miss which involves the CPU having to wipe the cache of the prefetched instructions and then lookup and load the correct instructions which can be expensive if the branching code runs a lot.

switch Statements

The other main control flow construct in C is called the switch statement. These take an integral value and matches it against a particular case for which it is equal and executes the corresponding code block. While similar are to if statements, switch statements differ in a subtle way. switch statements allow for fallthrough which means that the line of execution will continue through different cases if the switch statement is not broken out of using a break statement. The most common use of switch statements is with enums as they allow you to use an enum to represent a finite list of possible states and handle each case accordingly. switch statements can also have a default case to handle any uncaught matches.

#include <stdio.h>

enum cmp_result_t { UNDEF, EQUAL, LESS, GREATER };

int main()

{

int a = 4;

cmp_result_t cmp_r = UNDEF;

if (a > 5)

{

cmp_r = GREATER;

}

else if (a == 4)

{

cmp_r = EQUAL;

}

else

{

cmp_r = LESS;

}

switch (cmp_r)

{

case EQUAL:

puts("equal");

break;

case LESS:

puts("less");

break;

case GREATER:

puts("greater");

break;

default:

puts("NaN");

break;

}

return 0;

}

Ternary Operator

The final control flow construction is the ternary operator. This is a condensed if statement that is able to return a value. It involves a Boolean predicate followed by two expressions that may return or have side effects (ie. print something). The ternary operator comprises of the symbol ?: where ? is used to separate the predicate and branches and : is used to separate the branches.

#include <stdio.h>

int main()

{

int a = 4;

a > 4 ? puts("a > 4") : puts("a <= 4");

int b = a > 4 ? a + 5 : a * 100;

printf("%d\n", b);

return 0;

}

Loops

Loops area another integral construct in almost every programming language. They allow us easily and efficiently express code that we want to repeat. Loops generally execute while a particular predicate is upheld. Loops are essential to programming generic algorithms that operate on constructs that have a varying number of elements such as arrays.

while Loops

The most primitive of any loop is the while loop. As its name suggests a while loop will execute 'while' a particular predicate is still true. while loops have a similar syntax to if statements. Loops are often paired with an integral value indicating the current state of the loop. Because C loops are primitive and close analogies for the eventual assembly language they do not automatically track the state of the integral meaning you have to manually update its state.

#include <stdio.h>

int main()

{

int i = 0;

while (i < 5)

{

printf("%d\n", i);

i++;

}

return 0;

}

do-while Loops

do-while loops are similar to while except that the body of the loop is guaranteed to execute at least once. This is because; unlike while loops, the predicate is checked at the end of each loop not the beginning.

#include <stdio.h>

int main()

{

int i = 0;

do

{

printf("%d\n", i); //< But this still runs once

} while (i < 0); //< Will never be true ^

return 0;

}

for Loops

While while loops will run while a predicate is true which can potentially be 'infinite', for loops differ slightly in their semantics. for loops typically run for a particular number of iterations and are usually used with arrays to efficiently perform operations on the entire array. for loops have three key sub-statements that control its execution. The first is a statement used to initialise a variable to represent the current state. The second is the predicate the controls whether the loops continues and the final one is a post-loop expression that runs at the end of each iteration and is often used to increment or modify the current state integral. Each of these statements are separated by a ; in the clause (parenthesis) of the for loop.

#include <stdio.h>

int main()

{

int a[] = { 1, 2, 3, 4, 5 };

for (int i = 0; i < sizeof(a) / sizeof(a[0]); ++i)

{

printf("%d\n", a[i]);

}

return 0;

}

Note:

- Any loop can be exited early using a

breakstatement.- C doesn't have a function to retrieve the size of an array. However, we can use the

sizeofoperator to retrieve the total number of bytes the entire array occupies and then divide it by the size of one of the elements. This works because each element in an array are contiguous and aligned and thus it is easy to determine the number of bytes to jump for each element and because each element is the same size (type) then the total number of bytes is the array size types the size of each element.

Functions

The final core construction in C is functions. Functions are the most basic form of abstraction in all of computing. They allow us isolate and organise code into a common pattern that we can utilise and execute as we need.

What is a function?

Functions in C, while called functions, are more like sub-routines, a packaged piece of executable code, almost like a mini program we can use in a larger context. The reason I mention this is because functions in C are not pure functions ie. they are not as sound as mathematical functions, they're more hacky then that. This is largely because functions in C can have side effects and we have actually seen this a lot already. The difference between a pure function and a subroutine is that a function takes some input data called a parameter, argument or point (or tuple of data if multiple input points are needed) and returns another value. There is a clear pipeline of inputs and outputs to a function of this nature; think of an add (+) function, two arguments are given and a single value is returned. Side effects are operations which have an effect on something outside the function without communicating that information in its input or output. Be best example of side effects are any IO function like puts() or printf(). These are functions by the C standard however, notice how we never took into account that we don't capture a return value from printf() but it still printed to the screen or even the possibility that printf() may have returned something and printf() does. In fact it returns the number of characters written to its output stream (standard out - stdout) and this is where the issue arises. By the definition of a function we described above, printf() is more like a character counter function after formatting as it inputs are just a string and a variable number of additional points and it returns the number of characters of the final formatted stream. Where is the information encoding the interaction is has with the screen? And thats just it, it doesn't. This is called a side effect, behaviour that is not defined or encoding in the information of the function. C functions have the capabilities to be pure but can also have side effects and this is what makes C functions more akin to sub-routines however, while this difference is good to know they are used like functions in other languages.

#include <stdio.h>

int main()

{

int a = 5;

double b = 365.57;

unsigned sz = printf("%d + %f = %f\n", a, b, a + b);

printf("%u\n", sz);

return 0;

}

Function Signatures & Definitions

Functions in C have a particular signature. This is used to differentiate functions from each other. The key part of a signature is the functions name. This is used to call or refer to the function. In C there can be no duplicate functions meaning every function name must be unique, at least in the current translation unit (file). This includes name imported from header files (eg. <stdio.h>). A functions signature is also made up from the type of its input points and the return type. In general functions are declared first by their return type followed by their name. The points of a function are specified in a comma separated list surrounded in a parenthesis. Each point is a type declaration followed by a name, identical syntax to variable declarations. The body of a function is defined like other C constructions, using braces. Function bodies must contain a return statement which returns a value of the same type as the functions return type. Functions are also able to return void meaning that the function doesn't return anything. They are also able to take no input points.

int f(int a, double b)

{

/// ....

}

Note:

The declaration (signature) of a function can be declared separately (in a header file) from its definition (signature and body defined in a corresponding source file). If the declaration and definition are separated then the declaration replaces the braced code block with a semicolon

;at the end of the signature's line. eg:int f(int a, double b);

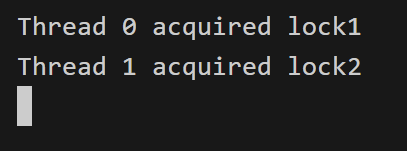

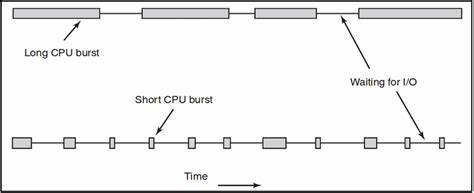

Calling Functions